What is Fine-Tuning?

This article examines fine-tuning in machine learning: what it is, why it matters, how it is carried out, the benefits it offers, and the challenges it presents.

The following topics are covered:

- What is Fine-Tuning?

- Why is Fine-Tuning Important?

- How Does Fine-Tuning Work?

- Step-by-Step Approach to Implement Fine-Tuning

- Difference Between Fine-Tuning and Transfer Learning

- Benefits of Fine-Tuning

- Challenges of Fine-Tuning

- Applications of Fine-Tuning in Deep Learning

- Case Studies of Fine-Tuning

- Wrapping Up


What is Fine-Tuning?

Fine-tuning is a machine learning technique that makes targeted adjustments to a pre-trained model to improve its performance on a specific task. Instead of starting from scratch, fine-tuning reuses the knowledge and features the model learned during its original training and adapts them to the characteristics and requirements of the new task. This is usually more efficient than training a new model from scratch and often yields better results, especially when task-specific data is limited.
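To make this concrete, here is a minimal NumPy sketch that uses linear regression as a stand-in for a deep network (an assumption for illustration, not how any particular library does it). The model is first pre-trained on a large synthetic source dataset and then fine-tuned for a few gradient-descent steps on a small dataset from a related target task. For comparison, the same number of steps is run from zero-initialized weights. The helper names, dataset sizes, and learning rate are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(true_w, n, noise=0.1):
    """Synthetic linear-regression data: y = X @ true_w + Gaussian noise."""
    X = rng.normal(size=(n, true_w.size))
    y = X @ true_w + noise * rng.normal(size=n)
    return X, y

def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))

def train(w, X, y, lr=0.05, steps=20):
    """Plain gradient descent on mean squared error, starting from weights w."""
    w = w.copy()
    for _ in range(steps):
        w -= lr * 2 * X.T @ (X @ w - y) / len(y)
    return w

# Two related tasks: the target task's true weights differ only slightly
source_w = rng.normal(size=20)
target_w = source_w + 0.1 * rng.normal(size=20)

# "Pre-training": many steps on a large source dataset
X_src, y_src = make_data(source_w, n=5000)
pretrained_w = train(np.zeros(20), X_src, y_src, steps=500)

# Small labeled target dataset, plus a held-out test set
X_tgt, y_tgt = make_data(target_w, n=30)
X_test, y_test = make_data(target_w, n=1000)

# Same small budget (20 steps): from pre-trained weights vs. from scratch
fine_tuned_w = train(pretrained_w, X_tgt, y_tgt)
scratch_w = train(np.zeros(20), X_tgt, y_tgt)

fine_tuned_loss = mse(fine_tuned_w, X_test, y_test)
scratch_loss = mse(scratch_w, X_test, y_test)
print(f"test MSE, fine-tuned:   {fine_tuned_loss:.3f}")
print(f"test MSE, from scratch: {scratch_loss:.3f}")
```

With the same small training budget, the run that starts from the pre-trained weights should reach a much lower test error, because it only has to correct the small difference between the source and target tasks.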

The following example illustrates how fine-tuning works in practice:

Suppose you start with a model pre-trained for general image classification and adapt it to distinguish between cows, horses, and humans. You freeze the early layers, which capture general features such as edges and textures, and train the later layers to learn the distinctions specific to this task. Training on a labeled dataset of images of the three classes lets the model specialize in recognizing them, and validation and testing confirm that it performs accurately. Fine-tuning thus improves the model’s ability to tell these subjects apart by building on the knowledge it gained during pre-training.
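The freeze-and-retrain pattern in this example can be sketched in a few lines of NumPy. Here a fixed random projection followed by ReLU stands in for the frozen pre-trained layers (a hypothetical substitute; in practice these would be the early layers of a real pre-trained network), and only a new softmax head with three outputs, one per class, is trained by gradient descent. The synthetic data, sizes, and learning rate are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)

# Stand-in for the frozen pre-trained early layers: a fixed projection + ReLU
W_frozen = rng.normal(size=(10, 32)) / np.sqrt(10)
W_frozen_before = W_frozen.copy()

def features(X):
    return np.maximum(X @ W_frozen, 0.0)

# Hypothetical labeled data: 3 clusters (0 = cow, 1 = horse, 2 = human)
centers = rng.normal(scale=2.0, size=(3, 10))
y = rng.integers(0, 3, size=300)
X = centers[y] + rng.normal(size=(300, 10))

# New task-specific head: 32 features -> 3 class scores
W_head = np.zeros((32, 3))
b_head = np.zeros(3)

def head_loss(F, y):
    """Softmax cross-entropy of the head; returns (loss, class probabilities)."""
    logits = F @ W_head + b_head
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    return -np.mean(np.log(probs[np.arange(len(y)), y])), probs

F = features(X)   # computed once: the frozen layers never change
Y = np.eye(3)[y]  # one-hot labels
initial_loss, _ = head_loss(F, y)
for _ in range(200):
    _, probs = head_loss(F, y)
    grad_logits = (probs - Y) / len(y)
    # Gradient descent updates only the head parameters
    W_head -= 0.01 * F.T @ grad_logits
    b_head -= 0.01 * grad_logits.sum(axis=0)

final_loss, probs = head_loss(F, y)
accuracy = np.mean(probs.argmax(axis=1) == y)
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, training accuracy {accuracy:.2f}")
```

Because the frozen layers never change, their outputs are computed once and reused at every step, which is one reason training only the head is cheap.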


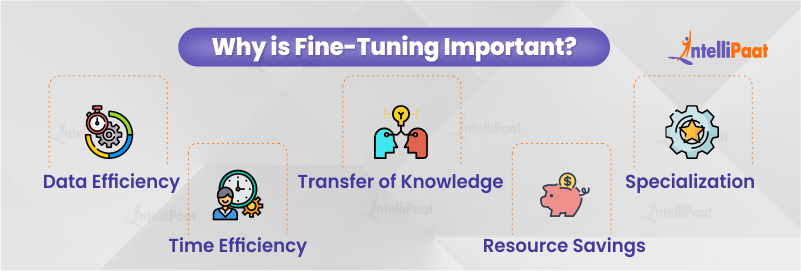

Why is Fine-Tuning Important?

Fine-tuning is important in machine learning for several reasons:

- Data Efficiency: Fine-tuning allows effective model adaptation with limited task-specific data. Instead of collecting and annotating a large new dataset, you can start from an existing pre-trained model and optimize it for your task with a much smaller one. This saves time and resources.

- Transfer of Knowledge: Pre-trained models have already learned valuable features and patterns from vast datasets during their initial training. Fine-tuning transfers this acquired knowledge to your specific task, so your model starts from useful representations rather than from random weights.

- Specialization: Fine-tuning lets you customize a model to excel at a specific task. It’s like tailoring a versatile suit to fit an individual. By adjusting the model’s parameters, you create a tool that performs well in specific situations.

- Time Efficiency: Training a model from scratch takes a long time, especially for deep and complex architectures. Fine-tuning speeds up the process because the model begins with already learned features, which reduces the time needed for convergence.

- Resource Savings: Training deep learning models demands considerable computational resources. Fine-tuning is resource-efficient because it reuses existing models and requires less extensive training.

How Does Fine-Tuning Work?

Fine-tuning begins by choosing a pre-trained model that has been trained on a large and diverse dataset. This model serves as a starting point with learned features and representations. Then, prepare your task-specific dataset. This dataset should be relevant to your target task and ideally include labeled examples for supervised tasks. The dataset should be organized in a way that aligns with the input format the pre-trained model expects.
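As an example of matching a model’s expected input format: the ImageNet weights that Keras provides for VGG16 expect caffe-style preprocessing, which is what `tf.keras.applications.vgg16.preprocess_input` applies. Images are converted from RGB to BGR, and each channel is zero-centered with the ImageNet mean, without scaling. The NumPy function below is a minimal re-implementation of that convention for illustration (the function name is hypothetical); in real code, call the library’s `preprocess_input` directly, and check which convention your chosen model uses, because other architectures expect different ones.

```python
import numpy as np

# ImageNet per-channel means in BGR order, as used by caffe-style preprocessing
IMAGENET_MEAN_BGR = np.array([103.939, 116.779, 123.68])

def vgg16_style_preprocess(images_rgb):
    """Convert RGB images with values in [0, 255] to BGR and subtract the ImageNet mean.

    images_rgb: array of shape (batch, height, width, 3).
    """
    images = np.asarray(images_rgb, dtype=np.float32)
    images_bgr = images[..., ::-1]          # RGB -> BGR
    return images_bgr - IMAGENET_MEAN_BGR   # zero-center each channel, no scaling

# A hypothetical batch of two 224x224 RGB images (the input size used for VGG16 below)
batch = np.random.default_rng(0).integers(0, 256, size=(2, 224, 224, 3))
x = vgg16_style_preprocess(batch)
print(x.shape)
```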

Depending on the nature of your task, you might need to modify the architecture of the pre-trained model. This could involve adjusting the number of output units in the final layer for classification tasks or modifying layers to suit specific input types.

During fine-tuning, you often start by freezing the initial layers of the pre-trained model, because these layers capture general features that are relevant to many tasks. You can then gradually unfreeze higher layers and fine-tune them as training progresses. This approach helps the model keep its learned general features while it adapts to task-specific ones.
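Gradual unfreezing can be expressed as a schedule that decides, at each epoch, how many layers counted from the top of the network are trainable. The pure-Python sketch below assumes a hypothetical list of layer names ordered from input to output and unfreezes one more layer every `epochs_per_stage` epochs. In Keras, you would apply such a schedule by setting each layer’s `trainable` attribute and then recompiling the model, since changes to `trainable` take effect only after compiling again.

```python
def trainable_layers(layer_names, epoch, epochs_per_stage=2, initially_trainable=1):
    """Return the layers that should be trainable at a given (0-based) epoch.

    layer_names is ordered from the input to the output. Training starts with only
    the top `initially_trainable` layers unfrozen, and one more layer below them is
    unfrozen every `epochs_per_stage` epochs, until all layers are trainable.
    """
    n_unfrozen = min(len(layer_names), initially_trainable + epoch // epochs_per_stage)
    return layer_names[len(layer_names) - n_unfrozen:]

# Hypothetical layer names, from input to output
layers = ["conv_block1", "conv_block2", "conv_block3", "conv_block4", "conv_block5", "classifier"]
for epoch in range(0, 12, 2):
    print(epoch, trainable_layers(layers, epoch))
```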

Then, define an appropriate loss function for your task. This could be cross-entropy for classification tasks, mean squared error for regression, etc. Choose an optimizer and set hyperparameters like learning rate and batch size. After this, train the modified model using your task-specific dataset. As you train, the model’s parameters are adjusted to better fit the new task while retaining the knowledge it gained from the initial pre-training.
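For reference, the two loss functions mentioned above take only a few lines of NumPy. This is a minimal sketch for illustration; frameworks ship tested implementations (for example Keras’s `categorical_crossentropy` and `mean_squared_error` losses) that you would normally use instead.

```python
import numpy as np

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean cross-entropy between one-hot labels and predicted class probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0)  # avoid log(0)
    return float(-np.mean(np.sum(y_true * np.log(y_pred), axis=1)))

def mean_squared_error(y_true, y_pred):
    """Mean of squared differences, the usual loss for regression."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

# Classification: the true class (index 1) gets probability 0.7, so the loss is -ln(0.7)
print(categorical_cross_entropy(np.array([[0, 1, 0]]), np.array([[0.2, 0.7, 0.1]])))
# Regression: only the last prediction is off, by 2, so the MSE is 2**2 / 3
print(mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```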

Monitor the model’s performance on a validation dataset. This helps you detect overfitting and decide which hyperparameters to adjust.
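Validation monitoring is often paired with early stopping: training stops once the validation loss has not improved for a set number of epochs (the patience), and the weights from the best epoch are kept. The pure-Python sketch below shows only the decision logic on a hypothetical sequence of validation losses; in Keras, the `tf.keras.callbacks.EarlyStopping` callback provides this behavior through arguments such as `monitor`, `patience`, and `restore_best_weights`.

```python
def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    Training stops after `patience` consecutive epochs without an improvement of
    more than `min_delta`. Epochs are 0-based; stop_epoch is the last epoch run.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Hypothetical validation losses: improvement, then overfitting sets in after epoch 3
val_losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.58, 0.61, 0.65]
print(early_stopping(val_losses, patience=3))  # (3, 6)
```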

After training, evaluate the fine-tuned model on an unseen test dataset to estimate its real-world performance and check how well it generalizes beyond the training data. Fine-tuning might require several iterations of adjusting hyperparameters, layers, and training strategies to achieve the best results.


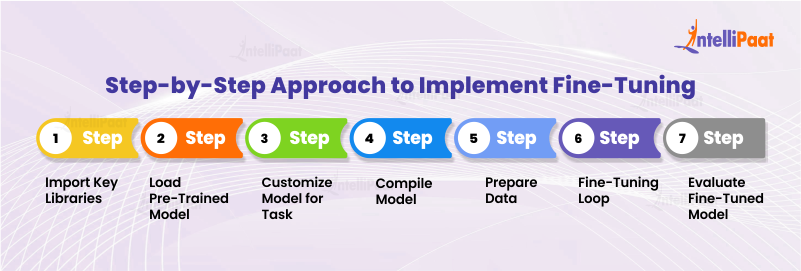

Step-by-Step Approach to Implement Fine-Tuning

Here is a simple way to fine-tune a pre-trained Convolutional Neural Network (CNN) for image classification.

Step 1: Import Key Libraries

```python
import tensorflow as tf
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
```

Step 2: Load Pre-Trained Model

```python
# Load VGG16 with ImageNet weights, without its original classification head
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))
```

Step 3: Customize Model for Task

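```python
num_classes = 3  # number of target classes, e.g. cows, horses, and humans

# Freeze the pre-trained convolutional base so its weights are not updated
for layer in base_model.layers:
    layer.trainable = False

# Add a new classification head for the target task
x = GlobalAveragePooling2D()(base_model.output)
output = Dense(num_classes, activation='softmax')(x)
model = Model(inputs=base_model.input, outputs=output)
```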

Step 4: Compile Model

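```python
# `learning_rate` replaces the older `lr` argument, which recent Keras versions reject
model.compile(optimizer=Adam(learning_rate=0.001), loss='categorical_crossentropy', metrics=['accuracy'])
```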

Step 5: Prepare Data

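```python
# Placeholders: build these from your own images, e.g. with
# tf.keras.utils.image_dataset_from_directory(directory, image_size=(224, 224), label_mode='categorical'),
# and apply tf.keras.applications.vgg16.preprocess_input to the images.
# One-hot ('categorical') labels match the categorical_crossentropy loss.
train_generator = ...  # Prepare your training data generator
val_generator = ...  # Prepare your validation data generator
```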

Step 6: Fine-Tuning Loop

```python
epochs = 10
history = model.fit(
    train_generator,
    epochs=epochs,
    validation_data=val_generator,
)
```

Step 7: Evaluate Fine-Tuned Model

```python
test_generator = ...  # Prepare your test data generator
test_loss, test_accuracy = model.evaluate(test_generator)
print(f"Test Accuracy: {test_accuracy}")
```

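The code above shows the basic approach to fine-tuning a pre-trained VGG16 model for image classification. Because every layer of the base model is frozen, only the new classification head is trained, a setup often called feature extraction. A common second phase unfreezes some of the top layers of the base model and continues training with a much lower learning rate.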

Difference Between Fine-Tuning and Transfer Learning

Fine-tuning is commonly treated as one form of transfer learning. The table below compares fine-tuning with transfer learning in the broader sense:

| Aspect | Fine-Tuning | Transfer Learning |
|---|---|---|
| Definition | Adjusting a pre-trained model for a specific task | Using knowledge learned on one task to improve performance on another |
| Process | Adjusts and adapts specific layers of the model | Applies the learned knowledge to another task |
| Training Data | Typically requires task-specific data | Relies on knowledge learned from source-task data |
| Extent of Changes | Often modifies only a subset of the model’s parameters | May involve modifying the architecture or model |
| Starting Point | Pre-trained model | Pre-trained model |
| Complexity | Generally less complex | Might involve more complex adjustments |


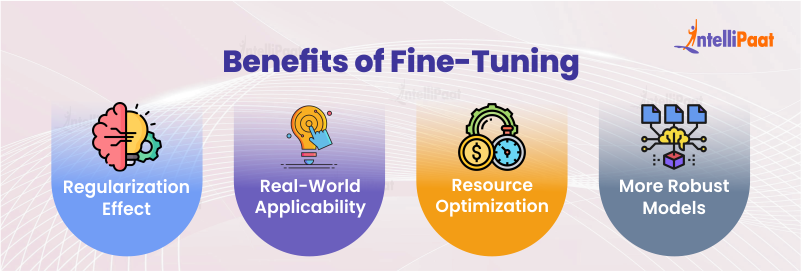

Benefits of Fine-Tuning

Fine-tuning offers several benefits that make it a valuable technique in machine learning:

- Regularization Effect: Starting from a pre-trained model acts as a form of regularization that helps reduce overfitting on small datasets. This is especially helpful when training deep models with limited data.

- Real-World Applicability: Fine-tuned models often perform better in real-world scenarios than models trained from scratch on the same limited data, because they capture domain-specific patterns on top of general ones, leading to more accurate predictions and outputs.

- Resource Optimization: Fine-tuning pre-trained models requires fewer resources than training models from scratch. This efficiency is crucial in industries with resource constraints.

- More Robust Models: Fine-tuned models tend to be more resilient to variations in the data, because the pre-trained model has already learned representations that generalize to novel data.

Challenges of Fine-Tuning

While fine-tuning is a powerful technique, it comes with a few challenges:

Overfitting

Fine-tuning on a small dataset can lead to overfitting: the model may perform well on the training data but fail to generalize to new data. Regularization techniques such as dropout, weight decay, data augmentation, and early stopping can help mitigate this risk.

Catastrophic Forgetting

When fine-tuning involves updating many layers, the model might lose its previously learned knowledge, leading to a phenomenon called catastrophic forgetting. Strategies like gradual unfreezing can help ease this issue.

Task-Specific Data

Fine-tuning requires task-specific data, and the availability of labeled data can be a challenge, especially for niche or specialized tasks.

Hyperparameter Tuning

Selecting appropriate hyperparameters, such as learning rate, batch size, and regularization strength, is crucial for successful fine-tuning. Incorrect choices can lead to suboptimal results.
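A simple, if brute-force, way to choose a hyperparameter such as the learning rate is a small grid search scored on a validation set. The NumPy sketch below fine-tunes a toy linear model from hypothetical pre-trained weights with several candidate learning rates and keeps the one with the lowest validation loss. The model, data, and candidate values are assumptions for illustration; with a real network, each candidate would be a full fine-tuning run.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical pre-trained weights and a small dataset for a related target task
pretrained_w = rng.normal(size=5)
target_w = pretrained_w + 0.3 * rng.normal(size=5)
X_train = rng.normal(size=(40, 5))
y_train = X_train @ target_w + 0.1 * rng.normal(size=40)
X_val = rng.normal(size=(200, 5))
y_val = X_val @ target_w + 0.1 * rng.normal(size=200)

def fine_tune(lr, steps=30):
    """Gradient descent on training MSE, starting from the pre-trained weights."""
    w = pretrained_w.copy()
    for _ in range(steps):
        w -= lr * 2 * X_train.T @ (X_train @ w - y_train) / len(y_train)
    return w

def val_loss(w):
    return float(np.mean((X_val @ w - y_val) ** 2))

# Try each candidate learning rate and score it on the validation set
candidates = [0.0001, 0.001, 0.01, 0.1, 1.0]
results = {lr: val_loss(fine_tune(lr)) for lr in candidates}
best_lr = min(results, key=results.get)
for lr, loss in results.items():
    print(f"lr={lr:<7} validation MSE={loss:.4g}")
print("best learning rate:", best_lr)
```

Too small a learning rate barely moves the weights within the training budget, while too large a one makes training diverge, which is why the best value usually lies somewhere in between.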

Applications of Fine-Tuning in Deep Learning

Fine-tuning is a versatile technique that finds applications across various domains in deep learning. Here are some notable applications:

- Image Classification: Fine-tuning pre-trained convolutional neural networks (CNNs) for image classification tasks is common. Models like VGG, ResNet, and Inception are fine-tuned on smaller datasets to adapt to specific classes or visual styles.

- Object Detection: Fine-tuning is used to adapt pre-trained object detection models, such as Faster R-CNN or YOLO, to new object classes or datasets, enabling accurate object localization and recognition.

- Semantic Segmentation: Fine-tuning is applied to pre-trained models like U-Net or DeepLab for pixel-level semantic segmentation tasks, allowing these models to excel in segmenting specific objects or features in images.

- Transfer Learning in NLP: Pre-trained language models like BERT, GPT, and RoBERTa are fine-tuned for various natural language processing (NLP) tasks such as text classification, named entity recognition, sentiment analysis, and question answering.

Case Studies of Fine-Tuning

The three case studies below give a high-level overview of fine-tuning in different domains, along with sample code snippets.

Fine-Tuning for Medical Image Analysis

Medical image analysis plays a crucial role in diagnosing and treating various diseases. Fine-tuning pre-trained models in this domain can significantly enhance the accuracy and efficiency of diagnoses.

Scenario: Diagnosing Skin Cancer

Challenge: Accurate identification of skin cancer from dermatoscopic images requires a deep understanding of complex patterns.

Approach: Fine-tune a pre-trained convolutional neural network (CNN) for skin lesion classification.

Code:

```python
# Import necessary libraries
import tensorflow as tf
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam

num_classes = 2  # hypothetical, e.g. benign vs. malignant; set to your dataset's class count

# Load pre-trained VGG16 model
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Freeze all layers in the base model
for layer in base_model.layers:
    layer.trainable = False

# Add custom classification layers
x = GlobalAveragePooling2D()(base_model.output)
x = Dense(128, activation='relu')(x)
output = Dense(num_classes, activation='softmax')(x)

# Create the fine-tuned model
model = Model(inputs=base_model.input, outputs=output)

# Compile the model (`learning_rate` replaces the older `lr` argument)
model.compile(optimizer=Adam(learning_rate=0.001), loss='categorical_crossentropy', metrics=['accuracy'])

# Fine-tune on skin lesion dataset (placeholders: build these from your own labeled images)
train_generator = ...
val_generator = ...
history = model.fit(train_generator, epochs=10, validation_data=val_generator)
```

Benefits: Having learned common features from a broad image dataset, the model can detect intricate patterns in skin lesions. This can help dermatologists make earlier and more accurate diagnoses.

Text Generation Using Pre-Trained Language Models

Generating coherent and contextually relevant text is a complex task that benefits from leveraging pre-trained language models and fine-tuning them for specific use cases.

Scenario: Generating Legal Documents

Challenge: Drafting complex legal documents requires adherence to specific language styles and terminology.

Approach: Fine-tune a pre-trained language model on a legal corpus containing legal contracts, statutes, and case law documents.

Code:

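```python
# Import necessary libraries
import torch
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load pre-trained GPT-2 model and tokenizer
model_name = 'gpt2'
model = GPT2LMHeadModel.from_pretrained(model_name)
tokenizer = GPT2Tokenizer.from_pretrained(model_name)

# Load and tokenize the legal text dataset
with open("legal_corpus.txt", "r", encoding="utf-8") as f:
    legal_text = f.read()
token_ids = tokenizer.encode(legal_text)

# GPT-2 handles at most 1024 tokens per sequence, so split the corpus into blocks
# (block size, learning rate, and epoch count below are illustrative values)
block_size = 512
blocks = [token_ids[i:i + block_size]
          for i in range(0, len(token_ids) - block_size + 1, block_size)]

# Train the model. model.train() only switches to training mode; the weights
# are updated by the optimization loop below.
optimizer = torch.optim.AdamW(model.parameters(), lr=5e-5)
model.train()
for epoch in range(3):
    for block in blocks:
        input_ids = torch.tensor([block])
        # With labels=input_ids, the model returns the next-token prediction loss
        loss = model(input_ids, labels=input_ids).loss
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()
```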

Benefits: The fine-tuned model can produce coherent text that follows the style and terminology of legal documents. This can save time for legal professionals and help reduce errors in routine drafting.

Video Action Recognition with Transfer Learning

Recognizing actions or activities within videos is a challenging task that can be improved by leveraging transfer learning and fine-tuning techniques.

Scenario: Recognizing Sports Actions

Challenge: Identifying specific actions within a sports video, such as a soccer player scoring a goal or a basketball player making a slam dunk.

Approach: Fine-tune a pre-trained 3D convolutional neural network (C3D) on a dataset of sports videos, adapting it to the nuances of different sports and actions.

Code:

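```python
# Import necessary libraries
import tensorflow as tf
from tensorflow.keras.applications import InceptionV3
from tensorflow.keras.layers import Dense, GlobalAveragePooling1D, Input, TimeDistributed
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam

num_classes = 10  # hypothetical: set to the number of action classes in your dataset
num_frames = 16   # frames sampled per clip

# Keras does not include a pre-trained C3D, and InceptionV3 is a 2D network that
# cannot take video input directly. As a substitute, apply the pre-trained
# InceptionV3 to every frame and average the per-frame features over time.
base_model = InceptionV3(weights='imagenet', include_top=False,
                         input_shape=(112, 112, 3), pooling='avg')

# Freeze all layers in the base model
for layer in base_model.layers:
    layer.trainable = False

# Add custom classification layers on top of the per-frame features
inputs = Input(shape=(num_frames, 112, 112, 3))
x = TimeDistributed(base_model)(inputs)  # (batch, frames, 2048)
x = GlobalAveragePooling1D()(x)          # average over frames
x = Dense(256, activation='relu')(x)
output = Dense(num_classes, activation='softmax')(x)

# Create the fine-tuned model
model = Model(inputs=inputs, outputs=output)

# Compile the model (`learning_rate` replaces the older `lr` argument)
model.compile(optimizer=Adam(learning_rate=0.001), loss='categorical_crossentropy', metrics=['accuracy'])

# Fine-tune on sports action video dataset (placeholders: clips preprocessed with
# tf.keras.applications.inception_v3.preprocess_input, with one-hot labels)
train_generator = ...
val_generator = ...
history = model.fit(train_generator, epochs=10, validation_data=val_generator)
```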

Benefits: The fine-tuned model can learn to recognize actions across videos with varying camera angles and lighting conditions, which can support sports analysis and coaching.


Wrapping Up

Fine-tuning adapts pre-trained models to specific tasks with less data, time, and compute than training from scratch. As pre-trained models continue to improve, the range of uses for fine-tuning is likely to keep growing, helping businesses apply artificial intelligence to problems across many industries.


The post What is Fine-Tuning? appeared first on Intellipaat Blog.
