Linked Data uptake

Blog: Strategic Structures

Linked Data is a universal approach for naming, shaping, and giving meaning to data, using open standards. It was meant to be the second big information revolution after the world wide web. It was supposed to complement the web of documents with the web of data so that humans and machines can use the Internet as if it is a single database while enjoying the benefits of decentralisation1.

Today on the web we have 1495 linked open datasets, according to the LOD cloud collection. Some among them like Uniprot and Wikidata are really big in volume, usage, and impact. But that number also means that today, 15 years after the advent of Linked Data, LOD datasets are less than 0.005% of all publicly known datasets. And even if we add to that the number the growing amount of structured data encoded as JSON-LD and RDFa in the HTML, the large majority of published data is still not available in a self-descriptive format and is not linked.

That’s in the open web. Inside enterprises, we keep wasting billions attempting to integrate data and pay the accumulated technical debt, only to find ourselves with new creditors. We bridge silos with bridges that turn into new silos, ever more expensive. The use of new technologies makes the new solutions appear different and that helps us forget that similar approaches in the past failed to bring lasting improvement. We keep developing information systems that are not open to changes. Now we build digital twins, still using hyper-local identifiers, so they are more like lifeless dolls.

Linked Enterprise Data can reduce waste and dissolve many of the problems of the mainstream (and new-stream!) approaches by simply creating a self-descriptive enterprise knowledge graph, decoupled from the applications, not relying on them to interpret the data, not having a rigid structure based on historical requirements but open to accommodate whatever comes next.

Yet, Linked Enterprise Data, just like Linked Open Data, is still marginal.

Why is that so? And what can be done about it?

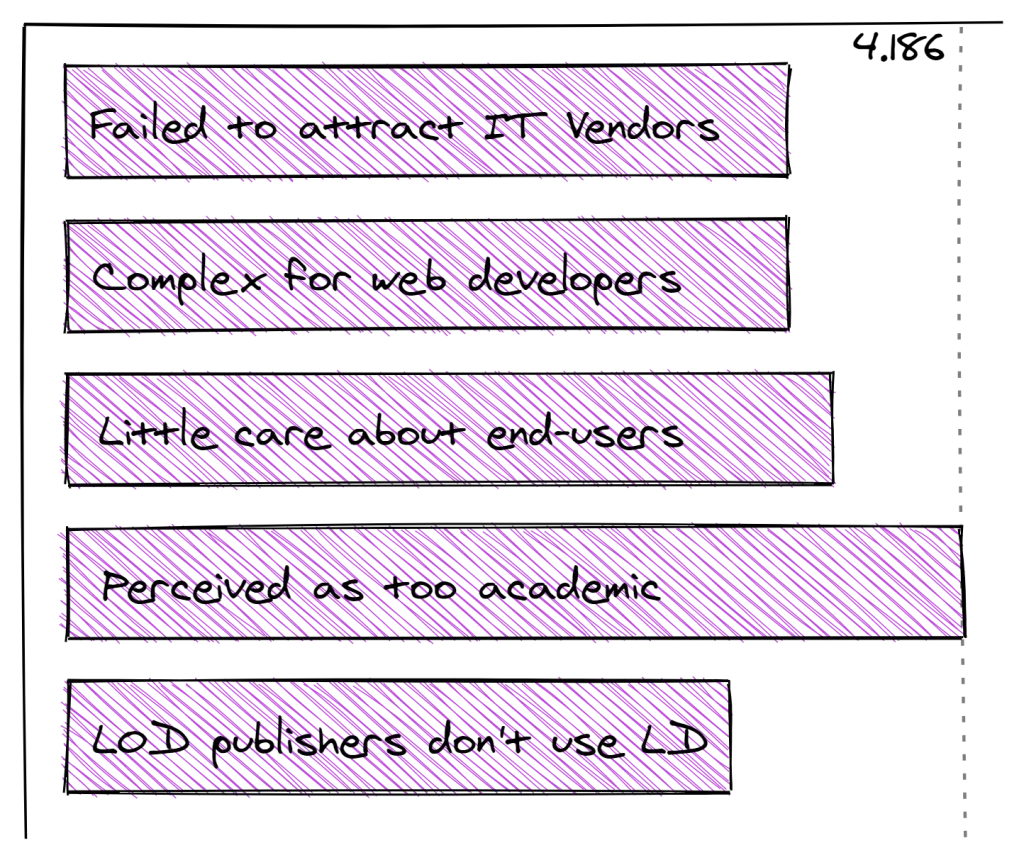

I believe there are five reasons for that. I explained them in my talk at ENDORSE conference the recording of which you’ll find near the end of this article. I was curious how Linked Data professionals will rate them and also what have I missed out on. So I made a small survey. My aim wasn’t to gather a very large sample, but rather to have the opinion of the qualified minority. And indeed most respondents had over 7 years of experience with Linked Data and semantic technologies. Here’s how my findings got ranked from one to five:

Linked Data failed to attract IT vendors. They usually make a profit using proprietary technologies. It’s way more difficult with open standards.

Little attractive tooling is offered to web developers, especially for the front-end. The whole technology stack is on the back-end. Interestingly, these two factors, IT vendors and web developers got finally2 equal average rank of 3.372 out of 5.

Being user-unfriendly got more votes. And the clear winner is that Linked Data, which is closely associated with the well-established research area “Semantic Web”, is perceived as being too academic. This result reminded me of the popular article of Manu Sporny from 2014, JSON-LD and Why I Hate the Semantic Web.

These four reasons are talked about a lot. But in the last decade, I found that there are other issues, hiding where nobody would look for them: the successful Linked Open Data practices. LOD publishers publish Linked Data but don’t use it themselves. They perceive it only as a cost, and what is worst – they neither enjoy the gains nor suffer the pains of using it. And this influences the way they make choices.

I thought this 5th reason is unpopular and won’t get many votes but I was wrong. It got an average of 3.097. That’s not much less than the failure to attracts web developers and IT vendors.

In an attempt to reduce a bit the conformation bias and learn new things, I also asked if there is another reason for the slow adoption of Linked Data and semantic technologies. According to 42% of the respondents, there is. Their reasons varied but their average score was 4.138.

I few clusters formed and the biggest one was around tooling. Tooling is not targeting existing skillsets, not easy to install and unreliable (disappears after a few years), none for the end-users and so on. To me, tooling is closely related to the web developers being attracted. From one side there is insufficient tool support for them, and that leads to fewer developers working with semantic technologies in turn not producing many new tools.

Clusters formed also around missing skillset and being too complex, but they are somehow related to the second and third reason from the hypothesis question. Another cluster was around Linked Data not having clear benefits, business cases being difficult to write and suchlike.

Some comments did not cluster and are worth citing in full

LD gets traction only in a data-centric architecture. As long as senior management doesn’t define a data-centric strategy, the advantages of LD remain difficult to see

What is data-centric architecture and strategy? The principles of the data-centric manifesto give a quick answer:

- Data is a key asset of any organization.

- Data is self-describing and does not rely on an application for interpretation and meaning.

- Data is expressed in open, non-proprietary formats.

- Access to and security of the data is a responsibility of the data layer, and not managed by applications.

- Applications are allowed to visit the data, perform their magic and express the results of their process back into the data layer for all to share.

A far better, yet slower way to understand data-centricity, would be to read The Data-Centric Revolution.

Here are two more comments from the survey:

Linking data in new ways requires people to think in new ways.

Linked Data: 80% of the problems a data manager needs to solve can be fixed without Linked Data. It’s only the last 20% that needs 80% of the time to get it right.

And here is one which I strongly agree with

IT project holders manage theirs projects through KPI, but knowledge management is a transverse discipline and can’t be seen as a single project.

Projects have the ability to create local optima at the expense of the enterprise in both space and time. The concern for enterprise coherence is either not represented at all, represented formally (IT Governance) or not taken seriously (Enterprise Architecture). So a project can achieve its KPIs at the expense of the enterprise-wide benefits right away, or in the future (technical debt). If Linked Data projects are measured the same way, they may perform badly and either be rejected, not repeated or – if it’s a pilot project – not allowed to go to production. The agility, the low cost of future changes and the coherence that Linked Enterprise Data brings, in short, its unique benefits, are also its biggest drawbacks from a project perspective. Unlike most other approaches, Linked Data should be looked at from an enterprise perspective and measured at a programme level, not at a project level.

Here’s another one

Not enough focus on person-centred graphs

This one also resonates with me. Some of you may have guessed that by my blog series on Roam, but my interest in Personal Knowledge Graphs goes wider and deeper than that. Speaking of Roam itself, its nodes have URIs but they are not persistent across graphs; Roam lacks explicit semantics, doesn’t support ontology import or matching and so on, but until it gets there or something else offers what’s missing, I’m using a few workarounds which I’ll share in Part 5 of the series. The important point is that our need to organize our own knowledge, to link items of and within tasks, projects, presentations, persons, articles, books, hobbies, videos, iweb pages, thoughts, ideas, writings and so on so that new associations and ideas emerge when we interact with our PKGs, is strong and growing and such a personal experience with knowledge graphs can also awaken the interest in semantics, once the size of our graphs and the appetite to do more things with them grows.

One particular response caught my interest. It’s an outlier in more than one dimensions, and points to very important aspects not caught in any of the reasons, neither my finding nor the additional ones. It deserves to be cited entirely:

1) Basically, people don’t care about sharing data in proper ways, ie, in standardised forms. In some cases or niches, yes, when dominant players have interest in it (eg, Google), yes. But, in general, they don’t see the need to integrate data if the main benefits of doing it are more for third parties than for themselves. Even worse, if they see open and standardised data as a threat to their competitive advantage. Sadly, there are even cases where “standardisation” for a company is actually the attempt to dominate the market with their own solutions (eg, Google and schema.org).

2) Many things on the Semantic web were conceived in deeply wrong ways. Eg, OWL is incredibly complex and the degree of formal commitment it requires is very incompatible with what you can practically obtain when you try to integrate data spread around the entire web.

3) RDF is missing fundamental features that are very important for daily work. Eg, RDF-Star came too late, we would need it years ago, now they’are all adopting property graphs.

That says a lot. Yet, if the author3 happens to read this article, feel free to add and elaborate if needed.

Now, if Linked Data has unleashed potential, what can we do to change that?

You’ll find some suggestion in my talk at the ENDORSE conference. Please take that as an invitation to comment and add yours. You can view and download the slide-deck here.

Yet, it’s worth noting that the picture this diagnosis draws changed significantly in the last couple of years. Maybe be the turning point was 2017, when SHACL, RDF-star, and Neptune appeared, as well as the first working algorithms to translate natural language questions into SPARQL.

Yes, vendors are still only a few and they are small but this is changing quickly. Amazon came up with Neptune, a hybrid LPD/RDF graph service. Another tech giant, Google, although not offering yet an RDF service, stimulated the spread of “surface-level” Linked Data. The recommendation of Google to encode structured data using JSON-LD and RDFa boosted the growth of structured data being encoded within HTML with schema.org. By 2020 there were 86 billion triples in the crawled web pages by the Common Web Crawl, and in terms of format, JSON-LD grew from 8.4 billion to 32 billion triples. Although the actual growth is probably a bit smaller, having in mind that the number of crawled web pages in 2020 was also bigger than in 2019, the relative growth in comparison with Microdata is quite telling. The Microdata triples grew much less, from 22 to 26 billion.

And yes, web developers still don’t speak RDF but now the number of JavaScript libraries for RDF is significant and new languages like LDflex came to make it easier for them to work with RDF. And since web developers are more comfortable with GraphQL than SPARQL, they can now query RDF also with GraphQL.

SPARQL is easier than most people think, and yet it still represents a barrier for them to explore and exploit the growing linked knowledge on the web. But this is changing as well. Now there are ways to query SPARQL endpoints with questions asked in natural language (QAnswer, FREyA).

Overall, I believe that Linked Data, supported by the current or by new semantic technologies, is inevitable. In the worst-case scenario, fulfilling the tongue-in-cheek prophecy from my talk, Linked Data will be mainstream in 2047.

Related posts

Wikipedia “Knows” more than it “Tells”

Footnotes