Why CIOs Should Consider Artificial Intelligence To Manage Their IT Portfolio

Blog: Capgemini CTO Blog

A typical global enterprise has several thousand IT assets deployed across its network which, by their very nature, are complex, varied, and difficult to inventory and therefore to manage. Understanding the assets in the IT landscape is vital to improving an IT department’s performance. How can we plan to reduce maintenance costs if we cannot control the number of net new applications created each year? Or how can we migrate to the cloud if we cannot map servers to the applications they support?

Against this backdrop, we have spent the last seven years helping our clients take control of their IT assets and working with them to build their improvement plans. This is made possible by an accelerated, industrialized approach called eAPM (economic Application Portfolio Management), which helps clients build, within six to eight weeks, an action plan covering areas such as application and infrastructure rationalization, Go To Cloud, operating model optimization, sourcing strategy, and digital transformation.

Our approach is powered by LinksITP, an advanced decision-making assistance solution that is proprietary to Capgemini and accessible in SaaS mode. It is built with the latest technologies and approaches, including Cloud, DevOps, Digital, Analytics, and, most recently, Artificial Intelligence.

We would like to share our experience with this recent move into Artificial Intelligence.

What are the problems a CIO faces while analyzing IT assets?

IT assets are difficult to analyze, even when we have all the data we need and the decision-making assistance tools to correlate, combine, or interpret it. CMDB (Configuration Management DataBase), application inventories, ticket base, list of suppliers, project portfolio, cost elements, process catalogue, and so on: analyzing a portfolio requires several tens of thousands of data points. Given this volume, a CIO cannot physically “see” everything without spending an impractical amount of time on it. And even when a diagnostic can be established, it is difficult to build an appropriate action plan and to quantify the expected benefits.

Although many application portfolio management (APM) tools on the market address multiple objectives, they are limited in their ability to guide a CIO toward the most relevant analysis axes. Calling on an expert is therefore vital. However, very few specialists can identify the weak signals, interpret trends, and translate them into actions.

Why Artificial Intelligence?

Building reasoning capabilities with a traditional algorithm is very difficult and has inherent limitations. Our idea was to use Artificial Intelligence (AI) techniques to create an engine that acts as a virtual expert, specifically trained to analyze IT assets. This AI engine can correlate, compare, and interpret huge volumes of data. It is also trained to present its results, explain them, and support the CIO in his or her search for better performance.

Over the past few months, we invested in R&D to design, develop, and train this virtual expert. It is now available to all our clients.

What was our approach? We asked ourselves:

• What should be the result?

• What should be the input?

• Should we design a self-learning system?

• How should we model and collect experts’ knowledge continuously?

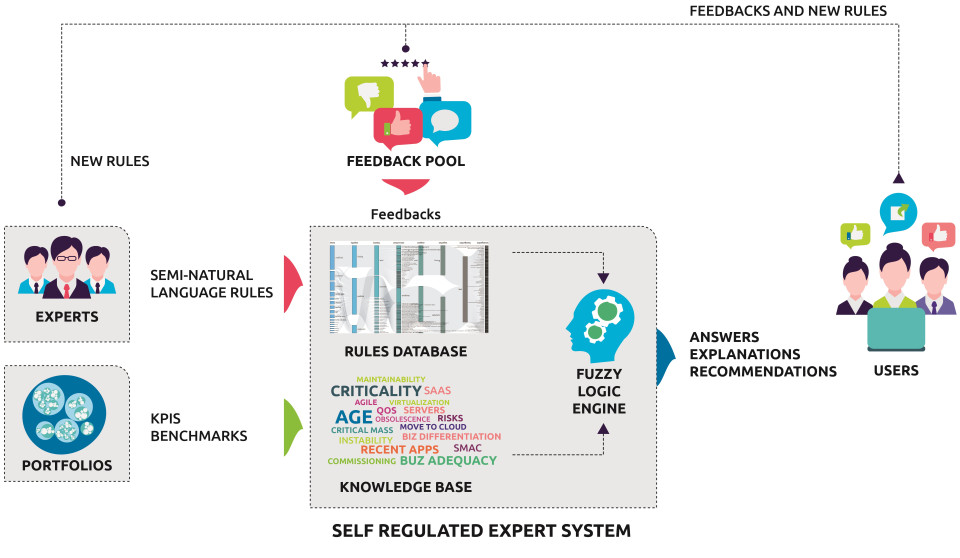

These questions quickly led us to design an Expert System (ES) based on an inference engine and rules base.
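To make the split between inference engine and rules base concrete, here is a minimal forward-chaining sketch in Python. It is not the LinksITP implementation, which the post does not describe; the rule names, KPI names (`avg_app_age_years`, `run_cost_share`), and thresholds are hypothetical. The engine keeps firing rules until no new observation can be derived, so a rule can build on conclusions reached by other rules.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

Facts = Dict[str, float]  # hypothetical: KPI name -> measured value

@dataclass(frozen=True)
class Rule:
    name: str
    # The condition sees the KPIs and the observations derived so far.
    condition: Callable[[Facts, Set[str]], bool]
    conclusion: str  # observation asserted when the condition holds

def forward_chain(facts: Facts, rules: List[Rule]) -> Set[str]:
    """Fire rules repeatedly until a fixed point: no rule adds anything new."""
    derived: Set[str] = set()
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in derived and rule.condition(facts, derived):
                derived.add(rule.conclusion)
                changed = True
    return derived

# Hypothetical rules base: the third rule chains on the first two.
rules = [
    Rule("old_portfolio", lambda f, d: f["avg_app_age_years"] > 10, "aging_portfolio"),
    Rule("high_run_cost", lambda f, d: f["run_cost_share"] > 0.7, "high_run_cost"),
    Rule("rationalize",
         lambda f, d: {"aging_portfolio", "high_run_cost"} <= d,
         "recommend_rationalization"),
]

facts = {"avg_app_age_years": 12.0, "run_cost_share": 0.75}
print(sorted(forward_chain(facts, rules)))
# ['aging_portfolio', 'high_run_cost', 'recommend_rationalization']
```

Because the loop runs to a fixed point, the result does not depend on the order in which rules are listed, which matters when a user community keeps adding rules.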

The inference engine is the brain of the virtual expert

This engine has a fuzzy-logic capability that enables it to reason on partial or missing data, to answer questions with “yes”, “no”, or “I don’t know”, and to explain the reasons for its findings. We also made it possible to declare rules, and to present results as text, in the most natural and familiar way possible for users. In addition, we gave the engine a self-regulating, learning capability, which lets it adapt the relevance of its results based on validation by users. The user community can also add new rules through its user-friendly, intuitive graphical interface.
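The post does not say how the fuzzy logic or the learning capability are implemented. The sketch below assumes one simple approach for each: a linear fuzzy membership function whose degree is mapped to “yes”, “no”, or “I don’t know” (missing data always yields “I don’t know”), and a relevance weight nudged toward 1 or 0 each time users validate or reject a result. The function names, the KPI name, the thresholds, and the learning rate are all hypothetical.

```python
from typing import Dict, Optional, Tuple

def ramp(value: float, low: float, high: float) -> float:
    """Fuzzy membership in 'high': 0 at or below `low`, 1 at or above `high`, linear between."""
    if value <= low:
        return 0.0
    if value >= high:
        return 1.0
    return (value - low) / (high - low)

def answer(kpis: Dict[str, Optional[float]], kpi: str, low: float, high: float,
           yes_at: float = 0.7, no_at: float = 0.3) -> Tuple[str, str]:
    """Answer 'is <kpi> high?' with 'yes', 'no' or "I don't know", plus an explanation."""
    value = kpis.get(kpi)
    if value is None:  # missing data: do not guess
        return "I don't know", f"{kpi} is missing from the input data"
    degree = ramp(value, low, high)
    if degree >= yes_at:
        verdict = "yes"
    elif degree <= no_at:
        verdict = "no"
    else:  # borderline membership: too uncertain to commit either way
        verdict = "I don't know"
    return verdict, f"{kpi}={value} is 'high' to degree {degree:.2f} (ramp {low}..{high})"

def update_relevance(weight: float, validated: bool, rate: float = 0.1) -> float:
    """Hypothetical learning rule: move the weight toward 1 on validation, toward 0 on rejection."""
    target = 1.0 if validated else 0.0
    return weight + rate * (target - weight)

# Hypothetical KPIs; tickets_per_app is deliberately missing.
kpis = {"apps_per_1000_employees": 45.0, "tickets_per_app": None}
print(answer(kpis, "apps_per_1000_employees", low=20, high=50))
print(answer(kpis, "tickets_per_app", low=5, high=15))

weight = 0.5
for validated in (True, True, False):  # two validations, then one rejection
    weight = update_relevance(weight, validated)
print(weight)
```

The explanation string is what allows each answer to be justified to the user rather than returned as a bare verdict.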

While the inference engine is the virtual expert’s brain, the rules base is its grey matter.

Two profiles had to work together to create it:

• Information system expert, who provides the know-how for diagnosing the information system

• Cognitive engineer, who translates the expert’s reasoning into rules to analyze a situation and develop an action plan.

We developed a list of 300 rules that cover every possible analysis filter. Each rule leads to one or more observations, each of which results from several sub-observations.

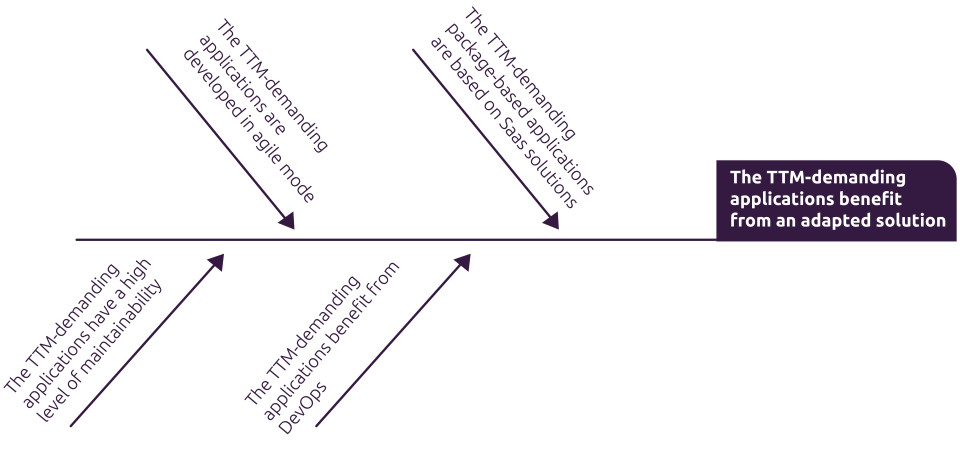

Example of sub-observations and the resulting observation
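Since the figure only shows an example, here is one hypothetical way to encode a rule whose observation is backed by sub-observations. It assumes that an observation is raised when at least `min_count` of its sub-observations hold; this aggregation policy, the class names, the KPI names, and the thresholds are illustrative assumptions. Returning the supporting sub-observations alongside the observation is what lets the engine explain its finding.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

KPIs = Dict[str, float]  # hypothetical KPI name -> value

@dataclass
class SubObservation:
    text: str
    test: Callable[[KPIs], bool]

@dataclass
class ObservationRule:
    observation: str
    subs: List[SubObservation]
    min_count: int  # hypothetical: how many sub-observations must hold

    def evaluate(self, kpis: KPIs) -> Optional[Tuple[str, List[str]]]:
        """Return the observation with its supporting sub-observations, or None."""
        held = [s.text for s in self.subs if s.test(kpis)]
        if len(held) >= self.min_count:
            return self.observation, held
        return None

rule = ObservationRule(
    observation="Application portfolio is oversized",
    subs=[
        SubObservation("More applications per user than the benchmark",
                       lambda k: k["apps_per_user"] > k["bench_apps_per_user"]),
        SubObservation("Many applications with overlapping functions",
                       lambda k: k["functional_overlap_ratio"] > 0.2),
        SubObservation("Net new applications outpace decommissioning",
                       lambda k: k["apps_created"] > k["apps_retired"]),
    ],
    min_count=2,
)

# Illustrative values only: two of the three sub-observations hold.
kpis = {"apps_per_user": 0.09, "bench_apps_per_user": 0.05,
        "functional_overlap_ratio": 0.1, "apps_created": 40, "apps_retired": 15}
print(rule.evaluate(kpis))
```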

To describe these rules, we developed a format close to natural language. This format is a key asset of the solution: it will make it easy to update and enrich the rules base in the future.
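The post does not publish its rule format. As an illustration only, the sketch below assumes a hypothetical `IF <kpi> <op> <number> [AND ...] THEN <observation>` syntax and uses Python’s `re` module to parse it into conditions the engine can evaluate. The syntax, the helper names `parse_rule` and `fires`, and the KPI names are all assumptions.

```python
import operator
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical rule syntax, for illustration only:
#   IF <kpi> <op> <number> [AND <kpi> <op> <number> ...] THEN <observation text>
OPS: Dict[str, Callable[[float, float], bool]] = {
    ">": operator.gt, ">=": operator.ge, "<": operator.lt,
    "<=": operator.le, "=": operator.eq,
}
RULE_RE = re.compile(r"^IF\s+(?P<conds>.+?)\s+THEN\s+(?P<obs>.+)$", re.IGNORECASE)
COND_RE = re.compile(r"^(?P<kpi>\w+)\s*(?P<op>>=|<=|>|<|=)\s*(?P<val>-?\d+(?:\.\d+)?)$")

Condition = Tuple[str, Callable[[float, float], bool], float]

def parse_rule(text: str) -> Tuple[List[Condition], str]:
    """Turn one natural-language-like rule into (conditions, observation)."""
    m = RULE_RE.match(text.strip())
    if not m:
        raise ValueError(f"not a rule: {text!r}")
    conditions: List[Condition] = []
    for part in re.split(r"\s+AND\s+", m.group("conds"), flags=re.IGNORECASE):
        c = COND_RE.match(part.strip())
        if not c:
            raise ValueError(f"bad condition: {part!r}")
        conditions.append((c.group("kpi"), OPS[c.group("op")], float(c.group("val"))))
    return conditions, m.group("obs").strip()

def fires(conditions: List[Condition], kpis: Dict[str, float]) -> bool:
    """A rule fires when every condition holds; a missing KPI prevents it from firing."""
    return all(k in kpis and op(kpis[k], v) for k, op, v in conditions)

conds, obs = parse_rule("IF avg_app_age_years > 10 AND run_cost_share >= 0.7 "
                        "THEN Legacy applications absorb most of the budget")
print(obs, fires(conds, {"avg_app_age_years": 12, "run_cost_share": 0.72}))
# Legacy applications absorb most of the budget True
```

Keeping rules as text rather than code means an expert can add or adjust one without touching the engine itself.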

The rules engine makes full use of the benchmark base contained in Links ITP (over 7 million measurement points). The rules compare the data from the analyzed context with the reference data in the benchmarks to generate observations. We tested the relevance of these rules in different contexts by comparing the results produced by the Links ITP virtual expert with those produced by human experts during previous diagnostics. The results exceeded expectations, with highly relevant output. The engine, with its self-learning capability, also produced additional observations that the human expert had missed. These additional insights come from the engine’s systematic activation of all the analysis filters.
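The two mechanisms in this paragraph can be sketched as follows: positioning a client KPI within a benchmark distribution, and checking the engine’s observations against a human expert’s earlier diagnostic. This is an assumption-laden illustration; the percentile method (ties counted as half), the P75 threshold, the KPI names, and the benchmark values are hypothetical and are not taken from the Links ITP benchmark base.

```python
from bisect import bisect_left, bisect_right
from typing import Dict, List, Set

def percentile_rank(value: float, benchmark: List[float]) -> float:
    """Percentage of benchmark points below `value`, counting ties as half (0..100)."""
    ordered = sorted(benchmark)
    below = bisect_left(ordered, value)
    equal = bisect_right(ordered, value) - below
    return 100.0 * (below + 0.5 * equal) / len(ordered)

def benchmark_observations(kpis: Dict[str, float],
                           benchmarks: Dict[str, List[float]],
                           high_pct: float = 75.0) -> Set[str]:
    """Flag every KPI that sits above the chosen percentile of its benchmark."""
    return {f"{name} above P{high_pct:.0f} of benchmark"
            for name, value in kpis.items()
            if name in benchmarks and percentile_rank(value, benchmarks[name]) > high_pct}

def compare_with_expert(engine: Set[str], expert: Set[str]) -> Dict[str, Set[str]]:
    """Split findings into agreed, missed by the engine, and additional (engine only)."""
    return {"agreed": engine & expert,
            "missed_by_engine": expert - engine,
            "additional": engine - expert}

# Hypothetical benchmark samples and client KPIs, for illustration only.
benchmarks = {
    "cost_per_app_k": [40, 55, 60, 70, 80, 90, 100, 120],
    "tickets_per_app": [5, 8, 10, 12, 15, 20, 25, 30],
}
kpis = {"cost_per_app_k": 130, "tickets_per_app": 28}

engine = benchmark_observations(kpis, benchmarks)
expert = {"cost_per_app_k above P75 of benchmark"}  # hypothetical earlier diagnostic
result = compare_with_expert(engine, expert)
print(result["additional"])
```

Because every KPI with a benchmark is checked, findings the expert did not look for end up in the “additional” bucket, which mirrors the systematic activation described above.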

The general operating scheme is as follows:

Before: an eAPM analysis covered nearly 40 different areas of the portfolio. It took an expert with all the data and resources an average of two full days.

After: with the AI engine leveraging Robotic Process Automation (RPA), the analysis result appears in seconds, highlighting the most prominent observations and the associated recommendations drawn from the complete rules base.
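The “After” scenario, where every rule in the base is activated and only the most prominent results surface, could look like the sketch below. It assumes, hypothetically, that each rule scores its observation by the relative gap between a KPI and its benchmark (stored under a `bench_` prefix), and that rules with missing data or no positive gap are skipped. The class and function names, KPI values, and recommendations are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

KPIs = Dict[str, float]  # hypothetical KPI name -> value, benchmarks under "bench_<name>"

@dataclass
class ScoredRule:
    observation: str
    recommendation: str
    score: Callable[[KPIs], Optional[float]]  # None means the rule cannot be evaluated

def gap(kpis: KPIs, name: str) -> Optional[float]:
    """Relative gap to benchmark (0.8 = 80% above); None if data or benchmark is missing."""
    bench = kpis.get(f"bench_{name}")
    if name not in kpis or not bench:
        return None
    return kpis[name] / bench - 1

def run_all(rules: List[ScoredRule], kpis: KPIs, top: int = 3) -> List[Tuple[float, str, str]]:
    """Activate every rule (no filter is skipped) and keep the most prominent results."""
    results = []
    for rule in rules:
        s = rule.score(kpis)
        if s is not None and s > 0:
            results.append((s, rule.observation, rule.recommendation))
    results.sort(key=lambda r: r[0], reverse=True)
    return results[:top]

rules = [
    ScoredRule("Portfolio larger than benchmark",
               "Launch an application rationalization program",
               lambda k: gap(k, "apps_per_user")),
    ScoredRule("Run costs above benchmark",
               "Review the sourcing strategy and operating model",
               lambda k: gap(k, "run_cost_share")),
    ScoredRule("Servers on unsupported operating systems",
               "Plan infrastructure upgrades or a move to the cloud",
               lambda k: gap(k, "unsupported_os_share")),
    ScoredRule("Cost per application above benchmark",
               "Renegotiate maintenance contracts",
               lambda k: gap(k, "cost_per_app_k")),
    ScoredRule("Ticket volume above benchmark",
               "Investigate application quality",
               lambda k: gap(k, "tickets_per_app")),
]

kpis = {
    "apps_per_user": 0.09, "bench_apps_per_user": 0.05,
    "run_cost_share": 0.8, "bench_run_cost_share": 0.7,
    "unsupported_os_share": 0.05, "bench_unsupported_os_share": 0.02,
    "cost_per_app_k": 50, "bench_cost_per_app_k": 60,  # below benchmark: no observation
    # tickets_per_app is missing, so that rule is skipped
}
for score, observation, recommendation in run_all(rules, kpis):
    print(f"{score:+.0%} {observation} -> {recommendation}")
```

Ranking by a single comparable score is one simple way to decide what is “prominent”; a real rules base would likely weigh business impact as well.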

We achieved our goal: a true virtual expert, powered by RPA, that is always available to perform exhaustive, complex analyses in record time. It can analyze dozens of KPIs calculated from tens of thousands of raw data items by activating all 300 knowledge-base rules in a few seconds!

AI is key to our future development. It harmonizes analysis quality from the top down, makes the product scalable for our teams, and reduces the effort required from the “human” experts who support them.
