Motivated Reasoning and Validating Hypotheses

Blog: Tyner Blain

In our continuing series on managing the risk in your backlog, we look at the risk of kidding ourselves. Specifically, we use cause and effect and hypotheses to identify the assumptions in our plans, but if we don’t do it the right way, we will lie to ourselves by validating our assumptions instead of responding to the truth when we see it.

Steps in a Journey to Managing Risk

In the previous article on Cause & Effect and Product Risk, we looked at the first problem: risks are hidden in our roadmap / backlog / plan. We also looked at how to address this problem: leveraging impact mapping and hypothesis formulation to expose those risks.

In this article, we will take the next steps on our journey to harnessing feedback to address those risks. A lot of writing about feedback from users just says “get feedback,” and, well, that’s not bad advice, but it is incomplete advice.

The advice to just “get feedback” is not enough because of the cognitive bias known as motivated reasoning. We are wired to look for data that reinforces what we already believe, instead of looking for the truth. How we get feedback matters almost as much as deciding to get it.

We have to define what we’re looking to see, before we start looking. This decision impacts how we write our hypotheses.

Understanding Our Hypotheses

In Lean UX, Jeff Gothelf references a couple of first principles of hypothesis writing, including two key elements of any good hypothesis.

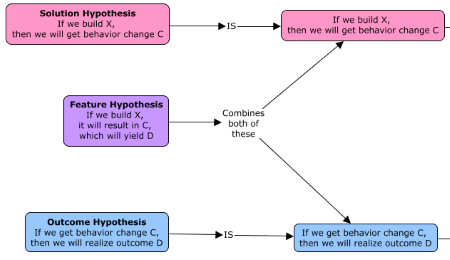

The first key element is making your assumption explicit: we believe if X, then Y.

In the previous article on product risk, we looked at two versions of making assumptions explicit:

- Solution hypothesis – if we build X, then we will see behavior change C
- Outcome hypothesis – if we have behavior change C, we will realize outcome D

Lean UX describes a feature hypothesis format, which takes a more design-centric approach to help teams focus on which features to build:

- Feature hypothesis – if we build X, it will result in C, which will yield D

As this decomposition shows, the feature hypothesis combines the solution hypothesis and the outcome hypothesis into a single compound statement.

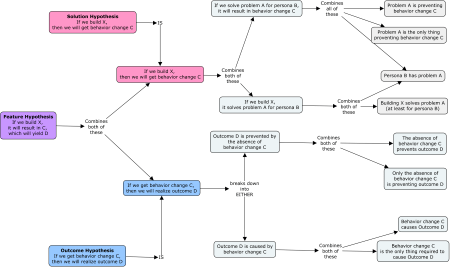

It is helpful to build out a hypothesis tree and identify all of the constituent components of the total hypothesis.
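To make the hypothesis tree concrete, here is a minimal Python sketch. It rests on two modeling assumptions of our own:

- A compound hypothesis holds only if every constituent hypothesis holds.
- Each leaf is either untested, supported, or disproved.

The `Hypothesis` class, its fields, and the `untested_leaves` helper are hypothetical names for illustration. They are not anything defined in Lean UX.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Hypothesis:
    """One node in a hypothesis tree (a hypothetical structure for illustration)."""
    statement: str
    # Leaf test result: True = supported, False = disproved, None = not yet tested.
    result: Optional[bool] = None
    # Assumption: a compound hypothesis is treated as the conjunction of its children.
    children: List[Hypothesis] = field(default_factory=list)

    def evaluate(self) -> Optional[bool]:
        """A leaf reports its own result; a compound node holds only if every child holds."""
        if not self.children:
            return self.result
        child_results = [child.evaluate() for child in self.children]
        if any(r is False for r in child_results):
            return False  # one disproved assumption sinks the compound claim
        if any(r is None for r in child_results):
            return None   # at least one assumption is still untested
        return True


def untested_leaves(node: Hypothesis) -> List[str]:
    """List the constituent assumptions that have not been tested yet."""
    if not node.children:
        return [node.statement] if node.result is None else []
    return [s for child in node.children for s in untested_leaves(child)]


# Hypothetical example: a feature hypothesis decomposed into its two parts.
solution = Hypothesis("If we build X, we will see behavior change C")
outcome = Hypothesis("If we see behavior change C, we will realize outcome D")
feature = Hypothesis("If we build X, it will result in C, which will yield D",
                     children=[solution, outcome])

solution.result = True           # suppose research supported the solution hypothesis
print(feature.evaluate())        # None: the outcome hypothesis is still untested
print(untested_leaves(feature))  # ['If we see behavior change C, we will realize outcome D']
```

Walking the tree this way keeps the separate assumptions visible even when a team talks about a single feature hypothesis. `untested_leaves` surfaces exactly which assumption still carries risk.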

I believe the combined form, the feature hypothesis, may be best for organizations that have incorporated lean processes for defining, deconstructing, and validating hypotheses into their workflow for some time. These teams will not lose sight of the multiple distinct assumptions and may benefit from managing a single hypothesis as the aggregation of assumptions.

I often work with teams which have not been explicitly managing the risk in their plans. They are learning how to engage stakeholders to acknowledge that plans even have manageable risks in them. In these environments, I am usually helping teams escape “feature factory” dynamics where stakeholders are just asking them to build stuff. These teams are focusing on changing the nature of their conversations to be oriented around outcomes, not outputs.

For these teams, there is a benefit to working with two distinct hypothesis types: solution hypotheses and outcome hypotheses. Keeping them separate simplifies decision making while gradually changing the nature of collaboration with other people throughout the organization.

The second key element is to identify what you’re looking for to prove or disprove your hypothesis.

Making Hypotheses Disprovable

Forming hypotheses exposes the latent risk in our plans. This highlights opportunities for research, which in turn informs our investment decisions. When we decide to reduce the risk in our plan (through research), we have to define what we need to see to know if our assumption was valid. We need to make our hypotheses testable.

A couple of years ago, Roger Cauvin introduced me to Karl Popper and falsificationism.

Noun. falsificationism (uncountable) (epistemology) A scientific philosophy based on the requirement that hypotheses must be falsifiable in order to be scientific; if a claim is not able to be refuted it is not a scientific claim.

Looking at a solution hypothesis, we have the following:

- We believe if we build X (which we assume solves problem A for persona B)
- Then we will see behavior change C
- We will know we are right when we see Y

This explicit call-out “we will know we are right when” is what we write to make the hypothesis testable. As Roger points out in his article, if we don’t write this in a way which is falsifiable, we aren’t writing it in a way which makes the hypothesis provable. We would only be creating the risk-management equivalent of vanity metrics. Our research would make us feel good without actually improving our plan.

In addition to making our hypothesis testable, documenting what we need to see before we try to see it is the antidote to the poison of motivated reasoning.

Motivated Reasoning

Like a lamp-post to a drunk, we are inclined to use data more for support than for illumination.

In Thinking in Bets, Annie Duke unpacks a powerful interpretation of the cognitive psychology research. In a nutshell, we want to feel good about ourselves and about our previous decisions. We feel bad when we find out we’re wrong, and naturally we want to avoid this feeling. So we look for information to prove we were right all along.

We are, in fact, motivated to interpret the data we acquire as feedback proving our correctness. Hence, motivated reasoning.

What we need is an impartial third party to objectively evaluate our results and determine if they prove or disprove our hypothesis. We cannot rely on ourselves to do it.

Annie Duke introduces a delightfully clever mental model for avoiding motivated reasoning, and we can use it to supercharge our hypotheses. She suggests we treat present self and future self as two different people. Future self is subject to motivated reasoning and therefore cannot be trusted to evaluate the results of testing a hypothesis.

Gathering feedback, when done well, is done in service of testing a hypothesis. Future self is the only person who can gather the feedback. What we can do is remove future self’s ability to interpret the feedback: present self will predetermine how any information will be interpreted.

This is where the third statement in our hypothesis structure becomes very powerful.

Revisiting the solution hypothesis, we now have the following:

- We believe if we build X (which we assume solves problem A for persona B)
- Then we will see behavior change C
- We will know we are right when we see Y

“When we see Y” is the interpretation, decided in advance by present self. We turn future self into an objective assessor of truth. Did we see Y? No? Hypothesis disproved. Yes? Hypothesis proved.

So, the onus is on us to properly define Y, to make sure the hypothesis is falsifiable. Essentially, to ask the right question.
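The division of labor between present self and future self can be sketched in a few lines of Python. This is a minimal illustration, assuming the “we will know we are right when” clause can be expressed as a predicate over observed metrics. The following are all hypothetical:

- the `SolutionHypothesis` class
- the `evaluate` function
- the `ticket_reduction_pct` metric
- the 30% threshold

```python
from dataclasses import FrozenInstanceError, dataclass
from typing import Callable, Mapping


@dataclass(frozen=True)
class SolutionHypothesis:
    """Written by present self, before any feedback is gathered (hypothetical structure)."""
    build: str            # X
    behavior_change: str  # C
    # "We will know we are right when we see Y", fixed up front as a predicate.
    we_know_we_are_right_when: Callable[[Mapping[str, float]], bool]


def evaluate(h: SolutionHypothesis, observations: Mapping[str, float]) -> str:
    """Future self only supplies observations; the verdict comes from the predefined test."""
    return "proved" if h.we_know_we_are_right_when(observations) else "disproved"


# Hypothetical example: the feature, behavior, metric name, and threshold are invented.
h = SolutionHypothesis(
    build="one-click report export",
    behavior_change="users export reports themselves instead of filing requests",
    we_know_we_are_right_when=lambda obs: obs["ticket_reduction_pct"] >= 30.0,
)

print(evaluate(h, {"ticket_reduction_pct": 12.0}))  # disproved
print(evaluate(h, {"ticket_reduction_pct": 41.0}))  # proved

try:
    # Future self tries to move the goalposts after seeing disappointing data.
    h.we_know_we_are_right_when = lambda obs: True
except FrozenInstanceError:
    print("criterion is locked")
```

Because the dataclass is frozen, future self cannot quietly swap in a friendlier criterion after seeing the data; the attempt raises `FrozenInstanceError`. All future self gets to do is supply observations.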

(Interim) Conclusion

So far, we have taken a couple steps in the journey to improve our product plans. We’ve done it in an iterative and incremental way.

First – document cause and effect relationships. This is directly valuable, enabling us to abandon the feature-factory.

Second – acknowledge the implicit risks in our plans and formalize hypotheses. This is also directly valuable, enabling us to address those risks.

Next – we will look at what we need to do to improve our ability to address the risks.

The post Motivated Reasoning and Validating Hypotheses first appeared on Tyner Blain.
