Linear Regression in DMN

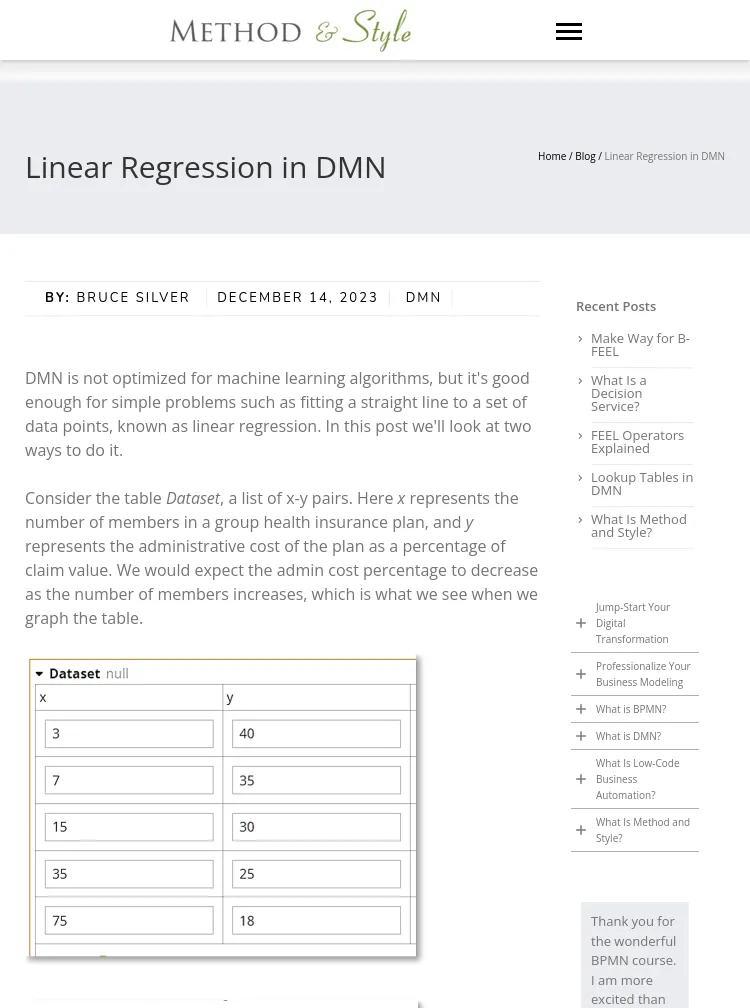

DMN is not optimized for machine learning algorithms, but it's good enough for simple problems such as linear regression, which fits a straight line to a set of data points. In this post we'll look at two ways to do it. Consider the table Dataset, a list of x-y pairs. Here x is the number of members in a group health insurance plan, and y is the plan's administrative cost as a percentage of claim value.
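To make the goal concrete before moving to DMN, here is a minimal Python sketch of fitting a line y = slope * x + intercept with the standard closed-form least-squares formulas. It is only an illustration of the underlying arithmetic, not the DMN model itself: the function name `fit_line` and the sample points are hypothetical, and the points are not the values from the Dataset table.

```python
from typing import List, Tuple


def fit_line(points: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Return (slope, intercept) of the least-squares line through points."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points to fit a line")

    # Means of x and y
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n

    # Sum of squared deviations of x, and sum of cross-deviations of x and y
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical x-y pairs for illustration only (NOT the post's Dataset table).
# They lie exactly on y = 2x + 1, so the fit should recover that line.
sample = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]
slope, intercept = fit_line(sample)
print(slope, intercept)
```

The same sums (count, means, squared deviations, cross-deviations) are the quantities any implementation of this closed-form fit has to compute, whatever language it is written in.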