Flowable Business Processing from Kafka Events

Blog: Flowable Blog

Last month we introduced the Flowable Event Registry. We showed how you can use events in your processes, how you can model them with the registry and how to use it with the Flowable Task Application.

Now we are going one step further by creating a more complex scenario, with multiple micro-services communicating with each other. Some of these micro-services are other Flowable applications and some are non-Flowable applications. This is an update of our Devoxx 2019 talk (watch the video) with the same title.

If you are not yet familiar with the Flowable Event Registry, we highly recommend reading the Introducing the Flowable Event Registry blog first before continuing. The entire code and examples for this blog post can be found in the flowable-kafka example.

Let’s begin with our use case. We have a fictional electric kick-scooter startup whose number one priority is customer satisfaction. We are going to build a software solution where users can rate how satisfied they were with the ride they had. Once a scooter has been used and returned, the customer is able to provide a review via our website. This review is then sent to a Kafka topic via a simple reactive application. In our main customer application we have a CMMN case for each customer. This allows us to group all reviews for a particular customer and to kick off a sentiment analysis process, which runs in another independent application that uses AWS Comprehend to do the analysis. If we receive a bad review, we start a task for one of the employees so they can repair the relationship with the customer.

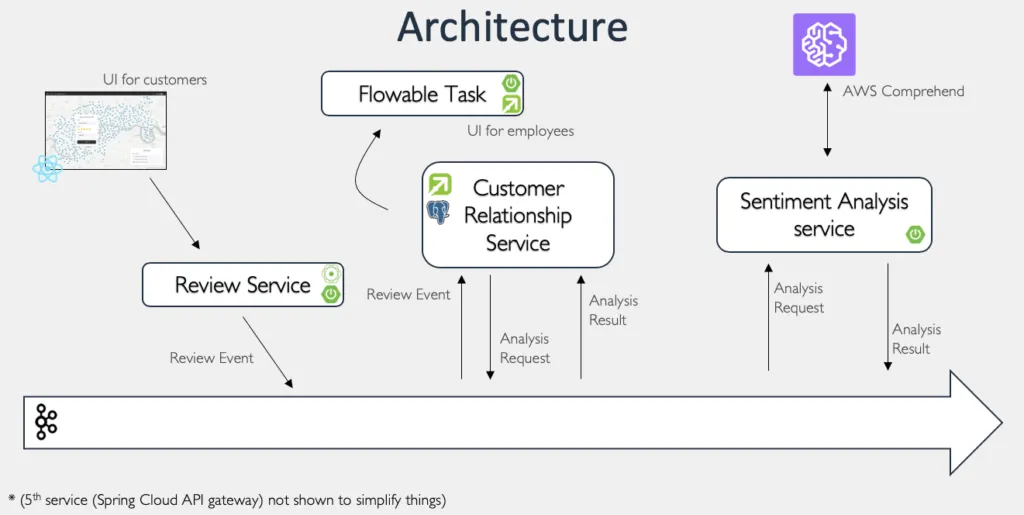

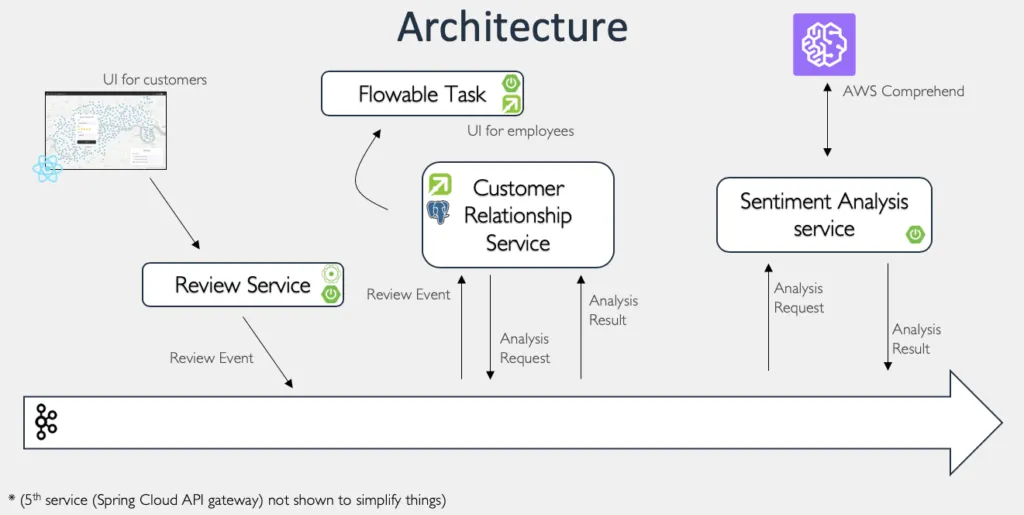

The architecture of our system looks like this:

At the bottom we have a Kafka Event Stream which is transporting all the events. Then there is a React UI for our customers, which is submitting the events to our review service.

- Review Service – Reactive Spring Boot application that uses Spring WebFlux to receive the reviews from our UI and transmits them to Kafka.

- Customer Relationship Service – the main application. This is a Flowable application where our customer case will live, along with the processes for the customer, and so on. It is a Spring Boot application that uses Postgres as a database. It will receive the Review and Analysis Result events and send out an Analysis request.

- Sentiment Analysis Service – a Flowable Spring Boot application which uses a process for performing the sentiment analysis.

- Flowable Task Application – connected to the same database as the Customer Relationship Service where the employees can interact with the Customer case.

- Spring Cloud API Gateway – for redirecting the traffic from the UI to the appropriate micro-service.

Review Service

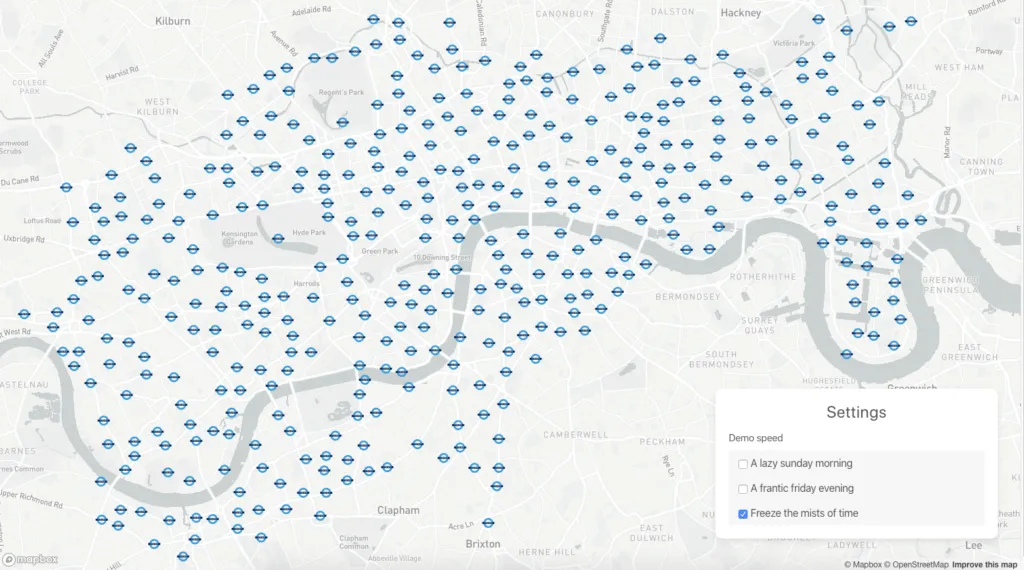

The review service is a simple Spring WebFlux application that receives the reviews from the customer from our custom React UI. The application displays a map from the Open Data of the London Santander Bike system. Every point on the map is a point where the customer can drop off our kick scooters and leave a review by clicking on it and filling in a form.

The review has the following structure:

    {
      "userId": "John Doe",
      "stationId": 5,
      "rating": 5,
      "comment": "Really great service"
    }

This review is then sent to the reviews Kafka topic.
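As a rough illustration of what ends up on the topic, the sketch below builds a review and serialises it to the JSON bytes a producer would publish. It is plain Python with no Kafka client, and the `Review` class and `to_kafka_value` helper are names made up for this example, not part of the Review Service code.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Review:
    """Hypothetical in-memory form of the review structure shown above."""
    userId: str
    stationId: int
    rating: int
    comment: str


def to_kafka_value(review: Review) -> bytes:
    """Serialise a review to UTF-8 JSON, the value a producer would publish."""
    return json.dumps(asdict(review)).encode("utf-8")


review = Review("John Doe", 5, 5, "Really great service")
value = to_kafka_value(review)
```

A real service would hand `value` to its Kafka producer with `reviews` as the topic name.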

Customer Relationship Service

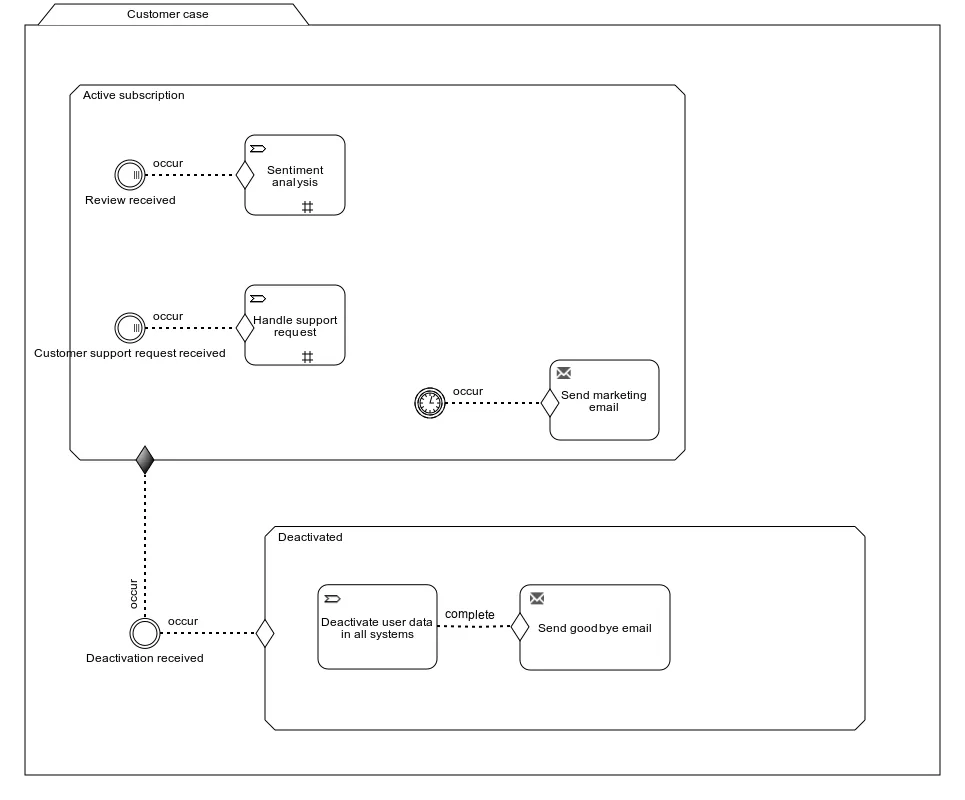

The customer relationship service is a Flowable Spring Boot application where the Customer case is created. The model for the customer case looks like this:

Whenever a new customer signs up we create a case. This case will start in the “Active subscription” stage and wait for the possible events that are relevant:

- Review received

- Customer support request received

- Timer event

- Deactivation received

For our demo, we have only implemented the “Review received” event; the others are there for the case to make more sense. This event listener has been configured to listen to the “Review Event”.

Before we show how to configure the “Review received” event, let’s model the “Review Event” that is sent by our Review Service.

In the screenshots below, we’ll be using enterprise Flowable Design 3.6.0 for convenience (which, at the time of writing, isn’t released yet but soon will be; if you’re interested in trying this yourself, have a look at the Flowable trial page). You can do all of this with the open source Modeler app and config.

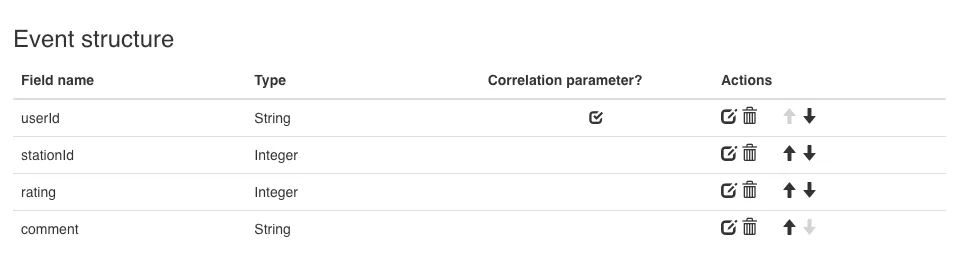

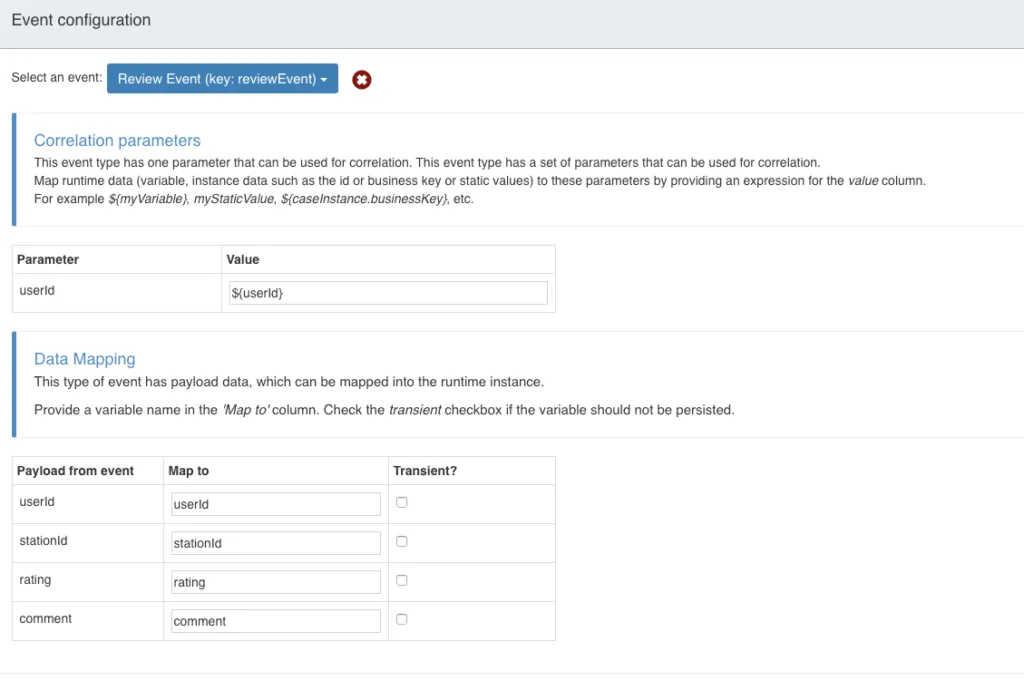

The event (as we saw in the JSON before) has a userId that is used as a correlation parameter, the stationId, the rating and the comment. Once we have this event we can configure the “Review received” event in the following way:

Let’s go over this configuration to understand what is happening. We need to define the correlation parameter for the event. As this case instance belongs to the customer with a given userId, we only want to activate the listener if the userId from the event matches the one from the case. Therefore, the correlation parameter needed is ${userId}. If we left this empty, the listener would be triggered for all review events. Once we have the correlation parameters configured, we need to configure the data mapping. Here we simply copy the parameters from the event payload into the case instance.
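The effect of the correlation parameter and the data mapping can be sketched in a few lines of plain Python. This is only a conceptual model, not the Flowable implementation: the `correlates` and `deliver` functions and the dictionary layout of a case are assumptions made for illustration.

```python
from typing import Any, Dict, List


def correlates(correlation: Dict[str, Any], event_payload: Dict[str, Any]) -> bool:
    """Return True when every correlation value matches the event.

    An empty correlation matches every event, which mirrors what
    happens when the correlation parameter is left empty.
    """
    return all(event_payload.get(name) == value for name, value in correlation.items())


def deliver(event_payload: Dict[str, Any], cases: List[Dict[str, Any]]) -> List[str]:
    """Copy the event fields into each matching case and return the matching ids."""
    triggered = []
    for case in cases:
        if correlates(case["correlation"], event_payload):
            case["variables"].update(event_payload)  # data mapping: copy the payload
            triggered.append(case["id"])
    return triggered


# Two hypothetical customer cases, each correlating on its own userId.
cases = [
    {"id": "case-1", "correlation": {"userId": "John Doe"}, "variables": {}},
    {"id": "case-2", "correlation": {"userId": "Jane Roe"}, "variables": {}},
]
event = {"userId": "John Doe", "stationId": 5, "rating": 5, "comment": "Really great service"}
triggered = deliver(event, cases)
```

Only the case whose userId matches the event is triggered; the other case is left untouched.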

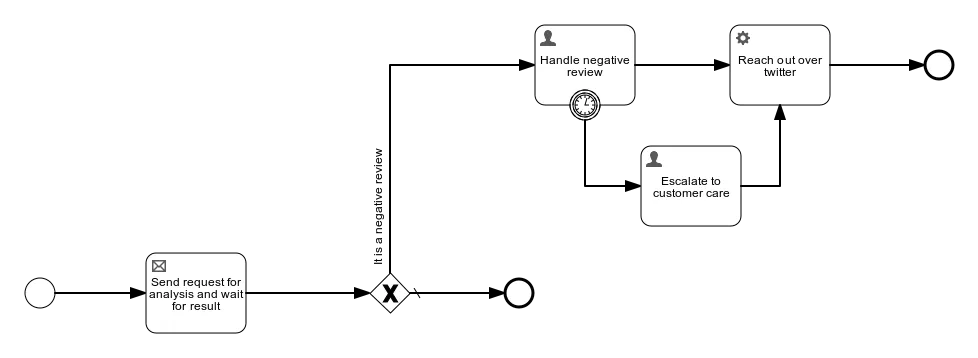

Great, so we now have our event configured. When the event is received it will trigger the Sentiment Analysis process task and pass the userId and comment as input parameters to it. Let’s have a look at that model:

As we mentioned earlier, the actual sentiment analysis happens in the independent Sentiment Analysis Service. Therefore, we have the “Send request for analysis and wait for result” task. Let’s define the “Sentiment Analysis” and “Sentiment Analysis Result” events:

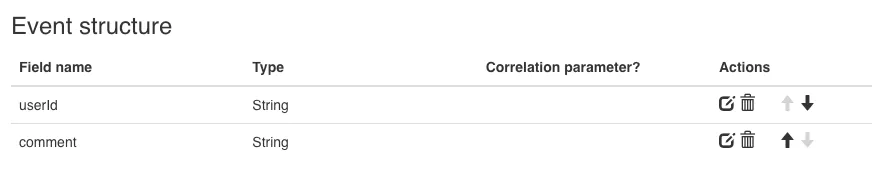

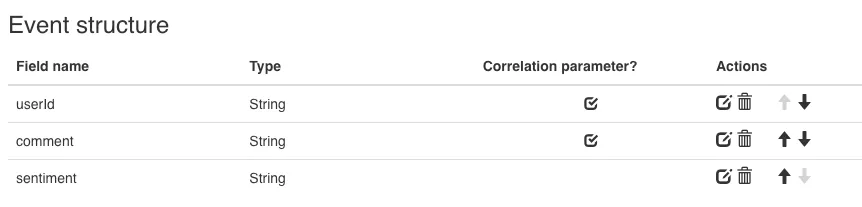

The Sentiment Analysis Event has the userId and comment as its event payload. The Sentiment Analysis Result event also carries the userId and comment, which serve as its correlation parameters, plus the sentiment, which holds the result of the analysis.

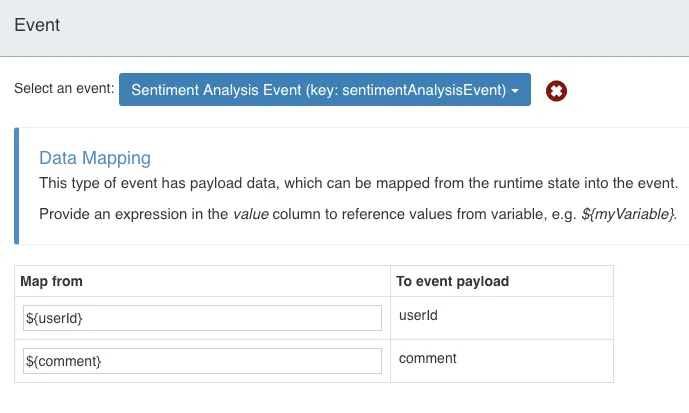

Let’s see how we can configure our task now:

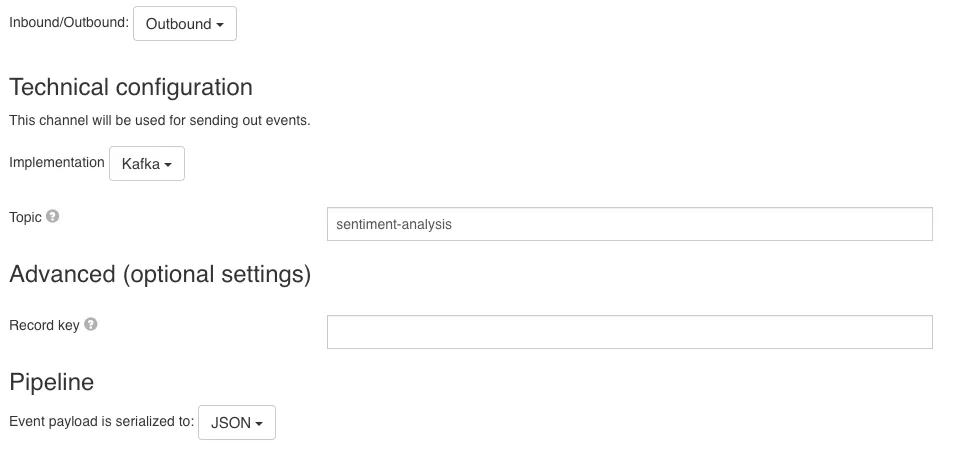

The event that we are sending is the “Sentiment Analysis Event” and we map the userId and comment from the process into the event payload. For an outbound event we also need to configure the channel that should be used to send the event. For this we are going to use the “Sentiment Analysis Outbound Channel” that is configured in the following way:

This is rather simple: we use Kafka as the implementation and sentiment-analysis as the Kafka topic to send to, and we serialize the event payload to JSON.
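To make the outbound side concrete, here is a minimal Python sketch of what that mapping and serialisation amount to. It does not use Flowable or a Kafka client; `build_outbound_message` is a hypothetical helper, and only the topic name and the two payload fields come from the configuration described above.

```python
import json
from typing import Any, Dict, Tuple


def build_outbound_message(
    process_variables: Dict[str, Any], topic: str = "sentiment-analysis"
) -> Tuple[str, bytes]:
    """Map userId and comment into the event payload and serialise it to JSON."""
    payload = {name: process_variables[name] for name in ("userId", "comment")}
    return topic, json.dumps(payload).encode("utf-8")


# The process may hold more variables than the event needs; only two are mapped.
variables = {"userId": "John Doe", "comment": "Really great service", "rating": 5}
topic, value = build_outbound_message(variables)
```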

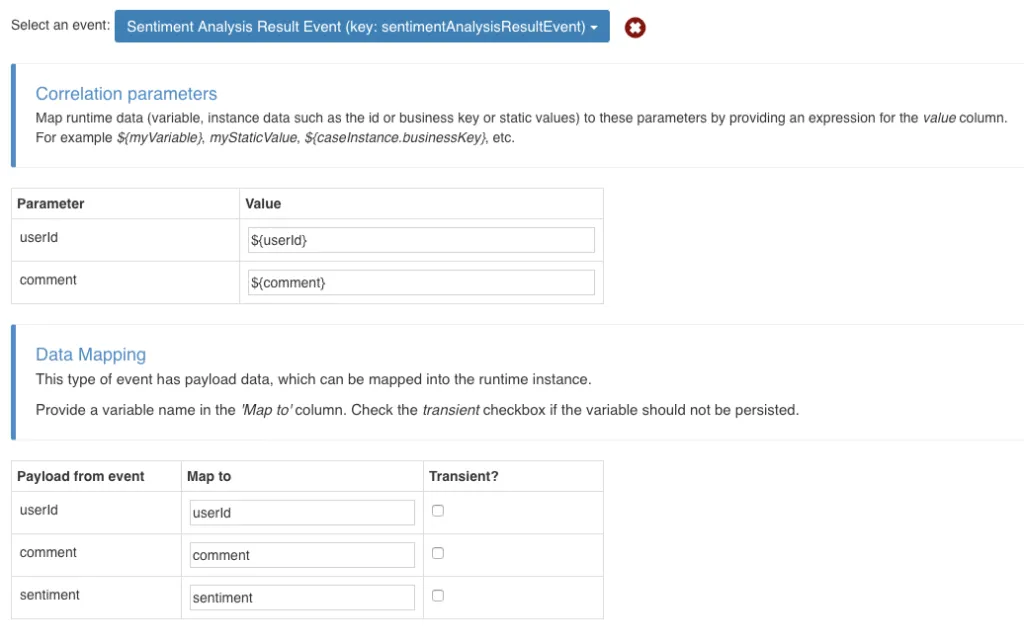

That was for the outbound, let’s configure the inbound event (the “Sentiment Analysis Result” event):

We want our send and receive task to continue once we receive the sentiment result for the given userId and comment, therefore we use those as correlation parameters. In the data mapping, we extract the sentiment from the event payload. Then, based on whether the sentiment is positive or negative, we can take different actions for the process.
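The waiting side can be pictured as follows. This is a sketch under assumptions, not Flowable code: `on_result` is a made-up function, and the two action names are placeholders for whatever the process does on each branch.

```python
from typing import Dict, Optional


def on_result(waiting: Dict[str, str], result: Dict[str, str]) -> Optional[str]:
    """Resume the waiting task only if userId and comment both match.

    Returns None when the result belongs to another request, otherwise a
    placeholder action name chosen from the extracted sentiment.
    """
    if (result["userId"], result["comment"]) != (waiting["userId"], waiting["comment"]):
        return None  # correlation failed: keep waiting
    sentiment = result["sentiment"]  # data mapping: extract the sentiment
    return "create_follow_up_task" if sentiment == "negative" else "no_action"


waiting = {"userId": "John Doe", "comment": "Really great service"}
matching = {"userId": "John Doe", "comment": "Really great service", "sentiment": "positive"}
other = {"userId": "Jane Roe", "comment": "Scooter was broken", "sentiment": "negative"}

action = on_result(waiting, matching)
ignored = on_result(waiting, other)
```

Correlating on both values means a second review from the same customer cannot resume the wrong process instance, as long as the comments differ.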

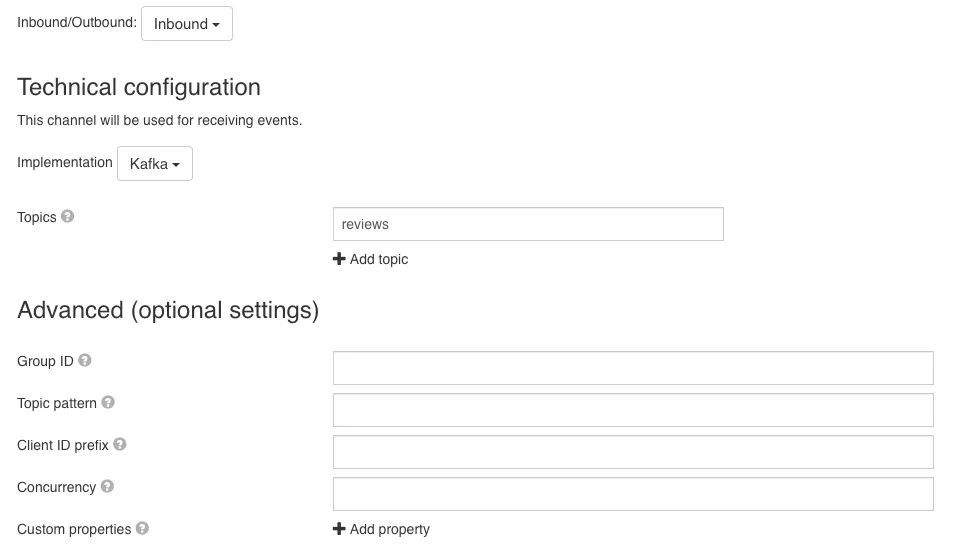

Up to now we have configured our case and process to listen to the appropriate events and to send data with a particular event. You are probably wondering how these events are actually received and what you need to do to make that happen. To receive these events we need to model our inbound channels:

- Review Inbound Channel

- Sentiment Analysis Result Inbound Channel

Let’s see what these configurations look like:

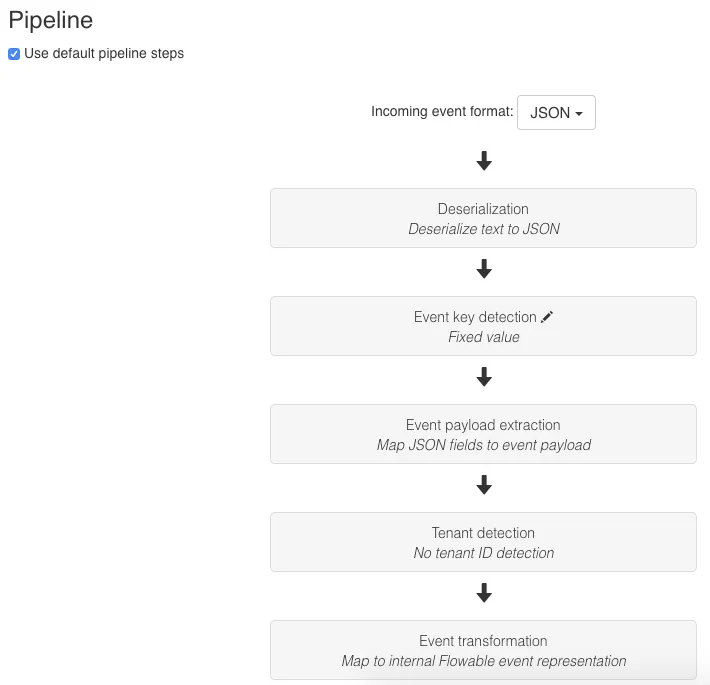

As you can see, this is similar to the Outbound channel. We will be using Kafka as an implementation, and listening to the reviews Kafka topic. We can also configure more advanced Kafka Consumer properties. Apart from these properties, we also need to configure the pipeline (how the received event should be transformed):

As we saw at the beginning, the review event is JSON, so our incoming event format is JSON. We use a fixed value (reviewEvent) for detecting the event key from the event; all the JSON fields are extracted to the event payload, there is no tenant id detection, and we will use the default internal event transformation:

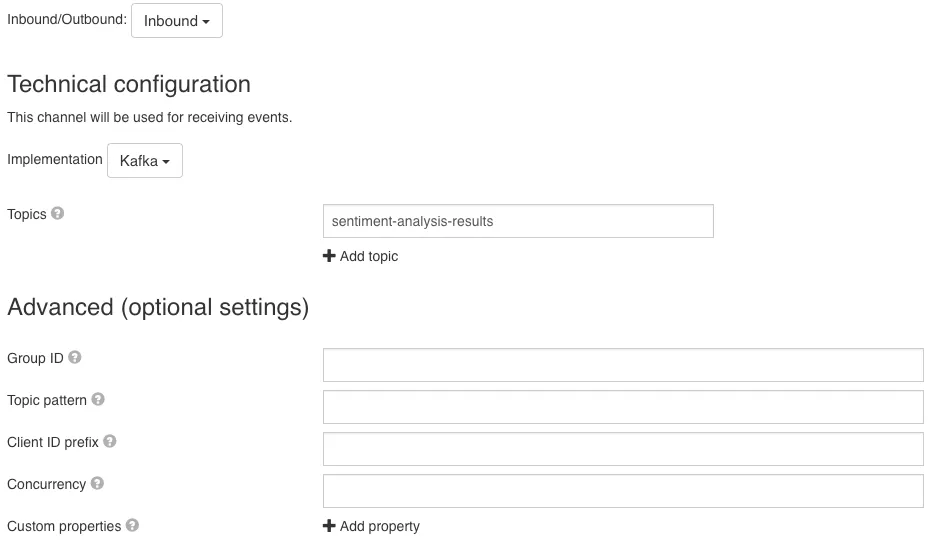

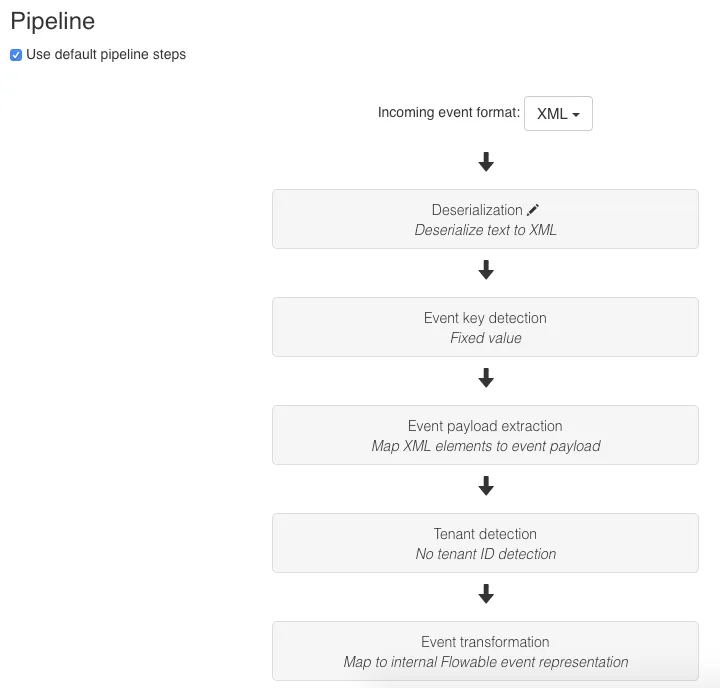

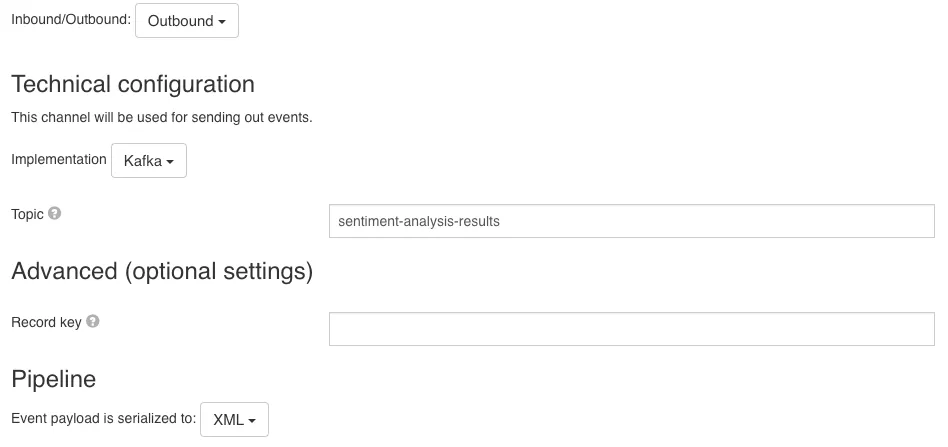
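The pipeline steps for the review channel can be mimicked in plain Python to show what each one contributes. This is a conceptual sketch only: `run_json_pipeline` and the shape of the returned dictionary are assumptions for illustration and do not reflect Flowable’s internal event representation.

```python
import json
from typing import Any, Dict


def run_json_pipeline(raw: bytes, event_key: str = "reviewEvent") -> Dict[str, Any]:
    """Apply the inbound steps described above to one Kafka record value."""
    document = json.loads(raw.decode("utf-8"))  # 1. deserialise the JSON
    return {
        "eventKey": event_key,       # 2. fixed-value event key detection
        "payload": dict(document),   # 3. all JSON fields become event payload
        "tenantId": None,            # 4. no tenant id detection
    }


raw = b'{"userId": "John Doe", "stationId": 5, "rating": 5, "comment": "Really great service"}'
event = run_json_pipeline(raw)
```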

The Sentiment Analysis Result inbound channel also uses Kafka and listens to the sentiment-analysis-results topic. We haven’t yet seen what the sentiment analysis result event payload looks like. Let’s make this a bit more complex and use XML instead of JSON, so our results look like:

    <sentimentAnalysisResult>
      <userId>John Doe</userId>
      <comment>Really great service</comment>
      <sentiment>positive</sentiment>
    </sentimentAnalysisResult>

And our pipeline looks like:

We will now use XML for the deserialisation; fixed value for the event key detection (sentimentAnalysisResult); map the XML elements to the event payload; no tenant ID detection; and the default mapping to the internal Flowable event representation.
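The XML variant of the pipeline differs only in the first and third steps. The following Python sketch uses the standard library’s `xml.etree.ElementTree` to show the idea; `run_xml_pipeline` and the returned dictionary are again illustrative assumptions rather than anything Flowable exposes.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict


def run_xml_pipeline(raw: str, event_key: str = "sentimentAnalysisResult") -> Dict[str, Any]:
    """Deserialise XML, assign the fixed event key, and map elements to payload."""
    root = ET.fromstring(raw)                             # 1. deserialise the XML
    payload = {child.tag: child.text for child in root}   # 3. child elements -> payload
    return {"eventKey": event_key, "payload": payload, "tenantId": None}


raw = """<sentimentAnalysisResult>
  <userId>John Doe</userId>
  <comment>Really great service</comment>
  <sentiment>positive</sentiment>
</sentimentAnalysisResult>"""
event = run_xml_pipeline(raw)
```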

Once we deploy these models to the Flowable Customer Relationship Service, the two inbound channels will create Kafka Consumers to listen to the given topics. Whenever an event is received, the appropriate case / process will get notified.

Note that if we redeploy these channel and event definitions, Flowable will make sure the relevant listeners are removed and new ones are created.

Sentiment Analysis Service

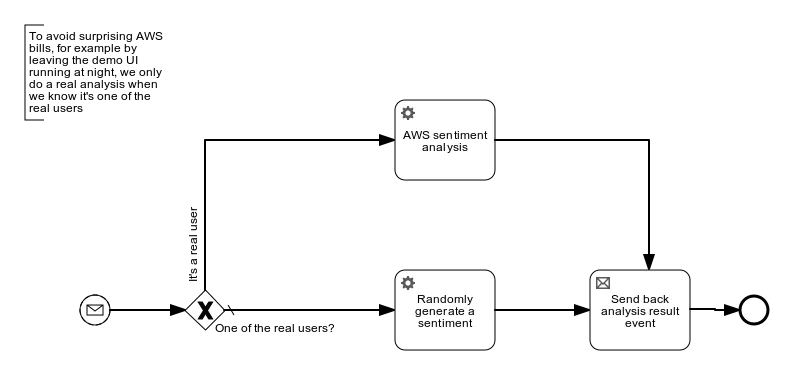

This service is also a Flowable Spring Boot application. It is completely separate from the Customer Relationship Service and has its own independent database. It has the following process model:

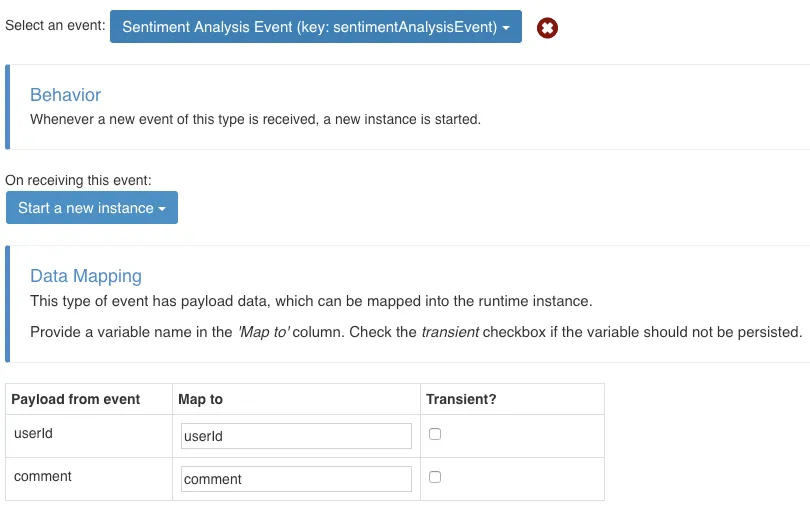

It uses an event registry start event listening to the “Sentiment Analysis Event” with the following configuration:

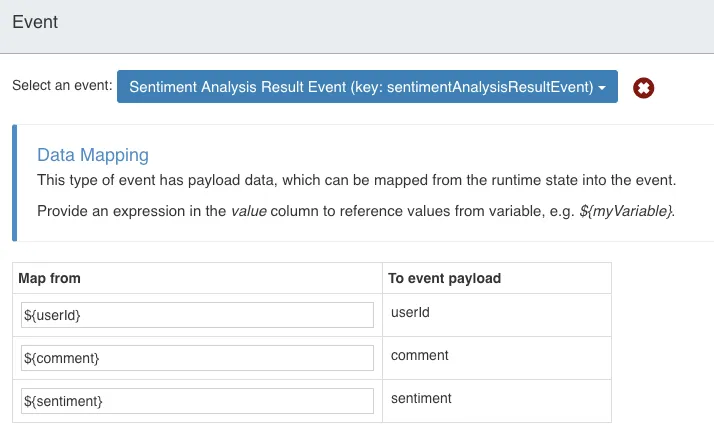

Whenever an event is received, we want to start a new process instance and map the event payload to the process variables userId and comment. The data is then sent to AWS Comprehend, after which the result is sent back by the “Send back analysis result event” task over the “Sentiment Analysis Result Outbound Channel”.
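The round trip inside this service can be sketched as a single function that takes a request event, runs a classifier, and builds the XML result shown earlier. The classifier here is a deliberately naive stand-in so the example runs on its own; it is not the AWS Comprehend API, and `handle_analysis_request` and `fake_classifier` are hypothetical names.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict


def handle_analysis_request(event: Dict[str, str], classify: Callable[[str], str]) -> str:
    """Run one request through a classifier and build the XML result event."""
    root = ET.Element("sentimentAnalysisResult")
    ET.SubElement(root, "userId").text = event["userId"]
    ET.SubElement(root, "comment").text = event["comment"]
    ET.SubElement(root, "sentiment").text = classify(event["comment"])
    return ET.tostring(root, encoding="unicode")


def fake_classifier(comment: str) -> str:
    """Naive stand-in for the external sentiment call; for illustration only."""
    return "positive" if "great" in comment.lower() else "negative"


result_xml = handle_analysis_request(
    {"userId": "John Doe", "comment": "Really great service"}, fake_classifier
)
```

Passing the classifier in as a parameter keeps the sketch independent of any cloud SDK and makes the handler easy to exercise in isolation.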

Takeaway

In this article we’ve shown how easy it is to build a non-trivial architecture with multiple micro-services using Flowable, with Kafka transporting events between those services. We’ve demonstrated how Flowable can hook into Kafka events and how it helps to build up the context in which to apply them. The example also used Spring Boot applications with Flowable, whose process and case models are exposed as micro-services.

All of the above can be found in our examples repository. Let us know in our forum if you have any feedback and, as usual, we accept PRs on this repository too ;-)!