Down the rabbit-hole with ‘evidence-based’

Blog: Tom Graves / Tetradian

If we want to make something ‘evidence-based’, then what exactly is ‘evidence’?

On the surface the answer might seem obvious – I mean, evidence is evidence, right? But in practice, as soon as we look a little closer, the evidence for evidence as evidence starts to look a lot less like evidence than at first seemed so much in evidence. Yes – it’s that kind of rabbit-hole…

Where this started was a Twitter-argument a couple of days back between two people I know in the KM (knowledge-management) space. For simplicity, I’ll call them A and B: I won’t name or quote either of them, because it’s not that relevant as such for here, and I want to avoid getting tangled in the argument itself. To me, the key parts of the argument that are relevant for this were:

- A claimed that B’s proposed course on KM could not possibly be valid because its methods were purportedly not ‘evidence-based’ in the sense that A would prefer

- B asserted that methods themselves were as much problematic (and hence necessarily subject to ‘evidence-based’ review) as the evidence assessed in those methods

It’s that latter point in particular that takes us straight down the rabbit-hole.

So, first question: what exactly is ‘evidence’? The literal meaning is that evidence is ‘that which is seen’ (or ‘seen outwardly’ – another point we’ll return to in a moment). In short, if we can see it, it’s evidence. Simple, right?

Uhh… – no. Not simple at all. Straight away we hit up against Gooch’s Paradox: ‘things not only have to be seen to be believed, but also have to be believed to be seen’. If we don’t take real care in facing up to that paradox, we’ll fall into any number of the myriad forms of cognitive bias. At the very least, we need to beware of falling into the circular-reasoning trap of ‘policy-based evidence’.

Next – and this came up in a key part of that Twitter-argument – we need to note that every viewpoint is inherently problematic, in the sense that each provides its own constraints on what can and cannot be seen, and hence is necessarily subject to assessment and review. To me one of the classic examples of this in enterprise-architecture is the bewretched ‘BDAT-stack‘. In a more general way, the problem here is highlighted by the Penrose Triangle – which, in a three-dimensional real-world, can only be made to seem to ‘make sense’ if we look at it from exactly one amongst an infinity of possible viewpoints:

Which also means that peer-review is problematic too. In the sciences especially, peer-review is supposed to be the key mechanism to filter out uncertainties or outright errors – but what happens if the peer-group all share the same viewpoints, the same assumptions? How do we filter out cognitive-bias, when the peer-group already share the same cognitive-bias? Tricky…

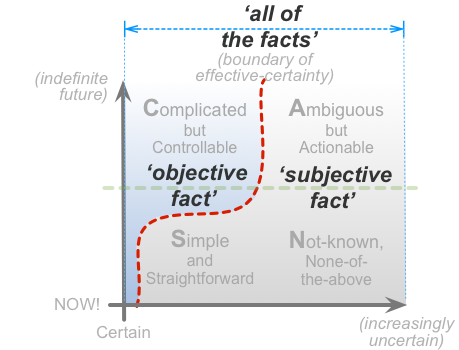

The next challenge comes from the term ‘evidence’ itself – that ‘e-‘ (‘ex-‘) prefix, meaning ‘outward’, that indicates that the term relates to ‘that which is outwardly seen’ – which most people seem to take to mean ‘evidence = objective-fact’. Which might seem fair enough, at first glance: but to fully understand any given context, what we’ll need is ‘just enough’ of all of the facts in that context, both ‘objective’ and subjective. We can illustrate this with a simple SCAN cross-map:

(The skewed red-line on the diagram above indicates that, courtesy of cognitive-bias, facts undergoing analysis can often seem more ‘objective’ than they actually are in the real-world.)

To capture all of the facts we’ll need, we will often need to make explicit efforts to capture and act on those subjective-facts, every bit as much as for the purported ‘objective-facts’. Especially in ‘soft-sciences’ fields such as KM, if we fail to treat objective-fact and subjective-fact as ‘equal-citizens’, we will be setting ourselves up for failure.

The blunt fact here is that feelings are facts. (For more on this, see the posts ‘Feelings are facts’ and ‘RBPEA: Feelings are facts’.) And whilst feelings are facts in their own right, opinions about those feelings are not fact – they’re merely opinions. It’s surprising how often people will get this fundamental point the wrong way round…

For KM, we do need distinct disciplines to work with subjective-fact – and they’re not the same as those we use for working with ‘objective’-fact. One of my favourite worked-examples of such disciplines applied in real-world practice is Helen Garner’s ‘The First Stone’: a careful exploration of her own responses within a fraught, highly-politicised context, drawing out crucial distinctions such as “what am I ‘supposed to’ feel?” or “what do I expect to feel?” versus “what do I actually feel?”. I’d also strongly recommend Nigel Green’s ‘VPEC-T’ method (Values, Policies, Events, Content, Trust) and/or Sohail Inayatullah’s ‘CLA’ (Causal Layered Analysis).

Which brings us to the next challenge, namely that methodology itself is inherently problematic. I’ve written a lot on this here over the years, for example in posts such as these:

- ‘Hypotheses, methods and recursion’

- ‘Declaring the assumptions’

- ‘On tools and metatools’

- ‘Theory and metatheory in EA’

- ‘More on theory and metatheory in EA’

- ‘On metaframeworks in enterprise-architecture’

- ‘The art and science of engineering’

- ‘On sensemaking-models and framework-wars’

One of the key points that comes up in the last of those posts is a need for metadiscipline in metamethodology – the discipline of discipline in our methodology for assessing methodology. Or, in practice, every methodology we use must be able to be applied to itself, and then applied recursively, with discipline, to itself – because if we don’t do that, we all but guarantee that such usage of that method will fail. One of the more notorious examples is in the common (mis)use of Derrida-style deconstruction in much social-theory – misuse, because the deconstruction is applied to everyone or everything except the social-theory itself. (Try it yourself, to see what happens when you do apply such deconstruction recursively: the insights will likely be, uh, interesting…)

A key concern for KM is that knowledge should be shared – in other words, KM is a social process. In turn, to quote from the post ‘The social construction of process’, “process is a process that always includes the social construction of its own definition of process” – with all of the inherent complexities and uncertainties that that implies.

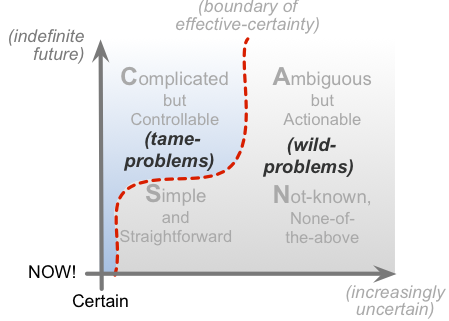

And the omnipresence of further inherent-complexities such as uniqueness, serendipity, ‘coincidensity‘, requisite-fuzziness and variety-weather means that by definition, KM cannot be reduced to a tame-problem. To be useful, it must cover the whole of the context: not just the repeatable, relatively-‘tame’ easy-bits, but all of it, both ‘tame’ and ‘wild’:

Which in turn means that, like enterprise-architecture, KM cannot be a ‘science‘ in the usual tame-problem sense. And hence, as with enterprise-architecture, KM needs methods and methodologies that reflect that fact. To refer back to that Twitter-conversation with which we started here, participant A’s preference for predefined, prepackaged, peer-reviewed, peer-‘approved’ ‘one-size-fits-all’ methods by definition will not fit that need. To me, participant B definitely had the right ideas there: in effect, a minimum requirement to fit the need would be a disciplined metamethodology from which to derive context-specific methods that can still link with each other across the entire context-space.

There are many parallels between the practices for EA and for KM, so there’s a lot of material here on this website that might be useful for participant B’s proposed KM-curriculum and the like – as per the posts via the links in the text above, for example. In a broader sense, and to link it also to the real-world practice of science, some further suggestions might include:

- To counter Gooch’s Paradox (‘things have to be believed to be seen’) and the all-too-natural tendency towards single-viewpoint cognitive-bias, use a systematic process to bridge dynamically between multiple paradigms, perspectives and views – such as the methods described in the post ‘Sensemaking – modes and disciplines’.

- To tackle real-world complexity and uncertainty, be willing to face up to, and practice, what we might describe as the EA-mantra – a recursive checklist of three phrases, “I don’t know…”, “It depends…”, and “Just enough…”.

- To understand science as practised, read WIB Beveridge’s 1950s classic ‘The Art of Scientific Investigation’ – particularly its sections on chance, intuition, strategy and the hazards and limitations of reason.

- To understand the real complexities and problematic nature of method, and the need for explicit metamethodology, read Paul Feyerabend’s 1970s classic ‘Against Method’ – particularly its questioning of the validity and limits of ‘falsification’ in science, and its challenge that ultimately the only valid theory of science is “anything goes”.

Perhaps the simplest summary here is that, for KM and EA alike, we need to open our eyes to the real complexities of the evidence about evidence – because it’s only when we do that that we have any real chance to make anything ‘evidence-based’ in a valid, meaningful and useful way.

Comments, anyone? Over to you if so, anyway.
