A designer’s view on AI ethics (part 3 of 3)

Blog: Capgemini CTO Blog

In the previous blog post, I described how we can use value-based design as a method of determining the ethics of artificial intelligence. This method is suitable when we want to bring a product (or service) with AI in it to market. But when we want to use AI to enhance our business processes and make them more efficient, we can use another method to determine the ethics of the AI.

Let me start by recalling the value-based ethics for product design. When AI is embedded into a product or service – and almost all practical AI is – we should evaluate the ethics of the product itself and the effects of AI on the product as a whole. The user interacts with the product, not only with its AI-based components.

When we use AI within a business context, particularly in a business process, we also shouldn’t look at the AI as some kind of standalone object. The AI is applied within the business process, enabling the goals we’ve set for that process. Again, AI isn’t used on its own; it’s used within a context – and that context, in this case a process, should be ethical, not just the AI used in that process.

Take this imaginary example: when AI is used to make superior decisions about which bank accounts to plunder, the AI as such might do a swell job, but the process as a whole is rather unethical.

We take the same approach as we did for the design ethics of products. This means that the values we’ve distinguished for good products and services also apply to business processes. However, the focus shifts. Products and services should be human-centered; business processes should be business-centered. You can be in the business of designing, making and selling ethical products, but that doesn’t make your business process, as such, ethical. So, what should we do to create ethical business processes using AI?

Luckily, this is not uncharted territory. Business ethics has been around for some time, and we can reuse the methods and insights gleaned from this discipline to establish the ethics of the AI applied within businesses. And yes, creating ethical products is part of an ethical business process.

But there’s more. Let’s first take a quick look at business ethics. According to Wikipedia: “Business ethics refers to contemporary organizational standards, principles, sets of values, and norms that govern the actions and behavior of an individual in the business organization.” Replace “individual” with “AI” and you get a grasp of ethics for AI.

There is no such thing as business ethics. There is only one kind – you have to adhere to the highest standards. (John C. Maxwell, author, speaker, and pastor)

This blog post is not the place for a mini lecture or course on business ethics. I will venture into only one aspect of business ethics, to give you insight into when the ethics of AI within businesses should be evaluated.

Responsible AI

The ethics of AI is also called responsible AI. Responsible AI is linked to responsible businesses. John Elkington introduced the Triple Bottom Line, a concept that encourages the assessment of overall business performance based on three important areas: profit, people, and planet. Whereas in design ethics the money aspect isn’t very explicit, on a business level making money is the essence of doing business. In determining the ethics, we must therefore look at the economics around the business processes and the AI.

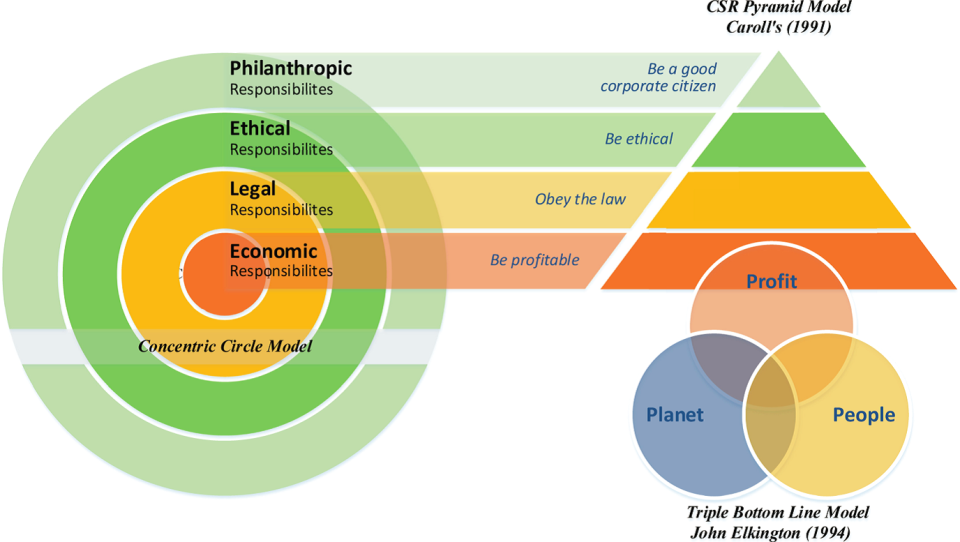

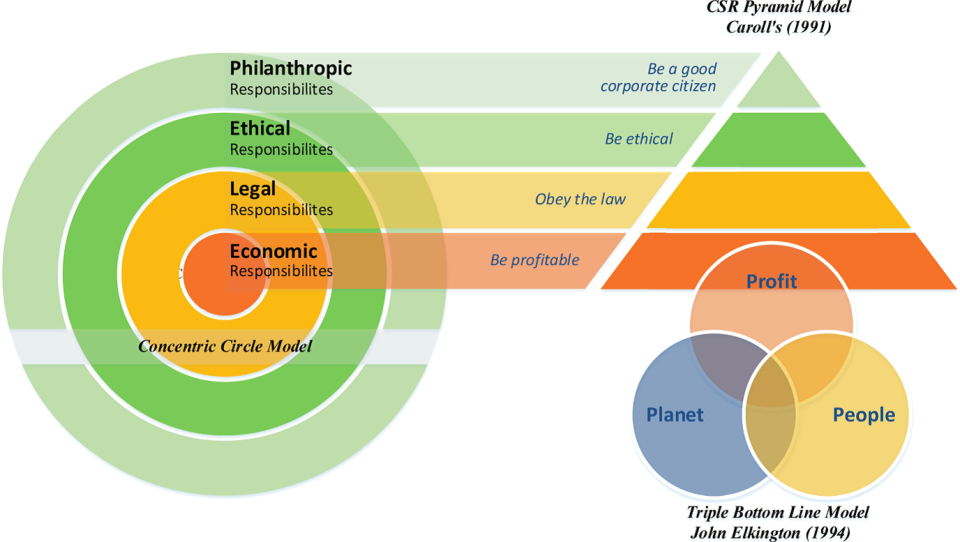

CED’s Concentric Circle Model, Carroll’s CSR Pyramid Model, and Elkington’s Triple Bottom Line Model (source: https://ir.unimas.my/id/eprint/18409/)

Organizations often have a set of values or principles which reflect the way they do business or to which they aspire to observe in carrying out their business. As well as business values such as innovation, customer service and reliability, they will usually include ethical values which guide the way business is done – what is acceptable, desirable and responsible behavior, above and beyond compliance with laws and regulations. (source: Institute of Business Ethics)

The CED’s Concentric Circle Model for business ethics places economic responsibilities at the center. This inner circle comprises factors such as the efficient allocation of resources, provision of jobs, and economic growth. In an AI context, that can be translated into:

- Profitability of the AI business case: what is the return on investment (ROI) of the AI, weighing efficiency gains against the costs of investment, training, and keeping the system up to date?

- Impact on the organization: changes in processes, job losses, retraining of personnel.

- Opening the future: enabling innovation, serving new markets, creating new products or services, becoming an “AI-first” company.

In my experience, the first bullet is also the first hurdle when it comes to starting an effective AI strategy. Most AI business cases don’t make it because the ROI cannot be established: we cannot assess the effects of AI within business processes with enough precision. And that’s even before the first ethics discussion takes place. However, the economics of an AI solution also depend on the content and foreseen effects of that solution – and the consequences of any solution have to be ethical.

Businesses are expected to operate within the law, so legal responsibility is depicted as the next circle of the model. Some people think that obeying the law is sufficient to be ethical. But that’s a misconception. Obeying the law is a prerequisite to being ethical – assuming that the law itself is ethical. History has proven that the latter hasn’t always been the case.

Ethical responsibility can be defined in terms of “those activities or practices that are expected or prohibited by society members even though they are not codified into law.” At this level, we can assess the ethics of the business the company is doing. Be aware that ethical aspects are also considered in the inner circles.

Business values

As with design ethics – described in the previous blog post of this series – these ethics are value-based. The effects, side-effects and other consequences of doing business with people on this planet should be assessed, weighed, and evaluated.

The most common ethical values found in corporate literature include integrity, fairness, honesty, trustworthiness, respect, openness. (Institute of Business Ethics)

Business ethics takes a broad view of the whole business of an organization. But in our AI assessment, we should take a closer look at how the use of AI will influence the business processes, and consequently, how these altered business processes will change the way an organization does business.

As an example, I’ve taken a value system by Mark S. Putnam (CEO at Global Ethics, Inc.) and looked at how such a system can be used for AI-enabled business processes. (There are many other good systems.)

- Honesty: also called transparency and explainability. AI should be open about how it makes decisions.

- Integrity: integrity connotes strength and stability. The AI should stay true to its goals over its lifetime. With learning machines, this implies that we should keep monitoring the system and keep feeding it new data so it stays up to date and relevant.

- Responsibility: it might be difficult to assess the responsibility of the AI system as such, but the people within the organization should use AI in a responsible manner.

- Quality: though most AI systems are used to make processes more efficient, we should make sure that the decisions the AI system makes meet current standards – and why not use AI to improve the quality of those decisions?

- Trust: everyone who comes in contact with you or your company must have trust and confidence in how you do business. If you’re using AI, your AI should be trustworthy too. Many companies don’t disclose their use of AI because of these trust issues, but that’s not very ethical.

- Respect: we respect the laws, the people we work with, the company and its assets, and ourselves. The AI should also respect the experience and knowledge of its human co-workers. For instance, we allow employees to override decisions made by AI when they deem those decisions to be wrong. Conversely, employees should not sabotage the AI.

- Teamwork: AI should collaborate with humans and enhance our human capabilities. Completely replacing humans with AI is currently not really feasible from an ethical standpoint.

- Leadership: managers and executives should uphold the ethical standards for the entire organization. A leader should make clear where and when AI is used and be open about the consequences of the AI for the organization and its people.

- Corporate citizenship: every company should provide a safe workplace, protect the environment, and be a good citizen in the community. The AI shouldn’t make that worse; it should improve the citizenship of the organization.

- Shareholder value: without profitability, there is no company. AI should contribute to the profitability of the organization.

Look at AI not as a machine, but as a virtual employee. You should set the same standards and behavioral requirements for this virtual employee as for your human employees. The AI should behave in the same manner as an employee – or better, for that matter. If you want your employees to behave responsibly, so should your AI. Don’t take for granted that AI will behave without being instructed to do so. And don’t assume that the supplier of your AI software has made that assessment for you.

I don’t want to discuss the formal (or legal) responsibilities of AI. The question “Who is responsible when my AI makes a wrong decision?” is still unanswered. At the very least, you should be aware of the ongoing discussion. And because you’re going to use an AI system within your specific business context and processes – independent of the way you acquired and trained the AI – you should also take responsibility for the ethical consequences of your AI implementation. The business ethics of your organization will help you assess that specific AI implementation – and keep checking whether the AI starts misbehaving over time.

Wrapping things up

In these three blog posts I’ve tried to explain that assessing the ethics of AI is not something you can do without the context of its use. AI will always be deployed as part of a product or service, or as a means of enhancing business processes. Broadening the scope may seem to make the assessment more complex, but I’m convinced that the broader scope will enable us to gather more precise and context-sensitive values, norms, and requirements.

Using existing frameworks for design ethics and business ethics will help you to answer questions around AI and ethics. These established methods are ready to be used. So don’t let unanswered ethical questions stop you from exploring the possibilities of AI. They can be answered, and you can answer them too.

Capgemini believes that using AI will benefit the profitability of your company, the people using AI directly and indirectly, and the world we live in. So let’s start using AI, in an ethical manner.

For more information on this, connect with Reinoud Kaasschieter.
